The answer seems simple, yet we have discovered that fear comes in many shapes and manifests itself in different ways. I learned that children access and express their fears more readily than adults, who have more complex ways of explaining this emotion. Unsurprisingly, the older you get, the more ideas you have about the meaning, the roots and the function of fear. When we try to describe the word and its meaning in an academic context, on the other hand, we find a vigorous debate playing out in fields such as neuroscience, psychology and education. Scientists have been trying to define this emotion for decades, yet there is no single correct answer; instead, there are many competing theories and ideas. Scientists have constructed theses and hypotheses about what fear is and, over time, deconstructed them again, sometimes retracting their own theories and declaring them wrong.

In what follows, I introduce three of the most influential contemporary scientists working on fear and give a brief insight into each of their perspectives.

Joseph E. LeDoux is an American neuroscientist whose research focuses primarily on survival circuits and their impact on emotions such as fear and anxiety.

Ralph Adolphs is an American Bren Professor of Psychology and Neuroscience and Professor of Biology who studies the neural and psychological basis of human social behavior.

Kay M. Tye is an American neuroscientist and professor at the Salk Institute for Biological Studies.

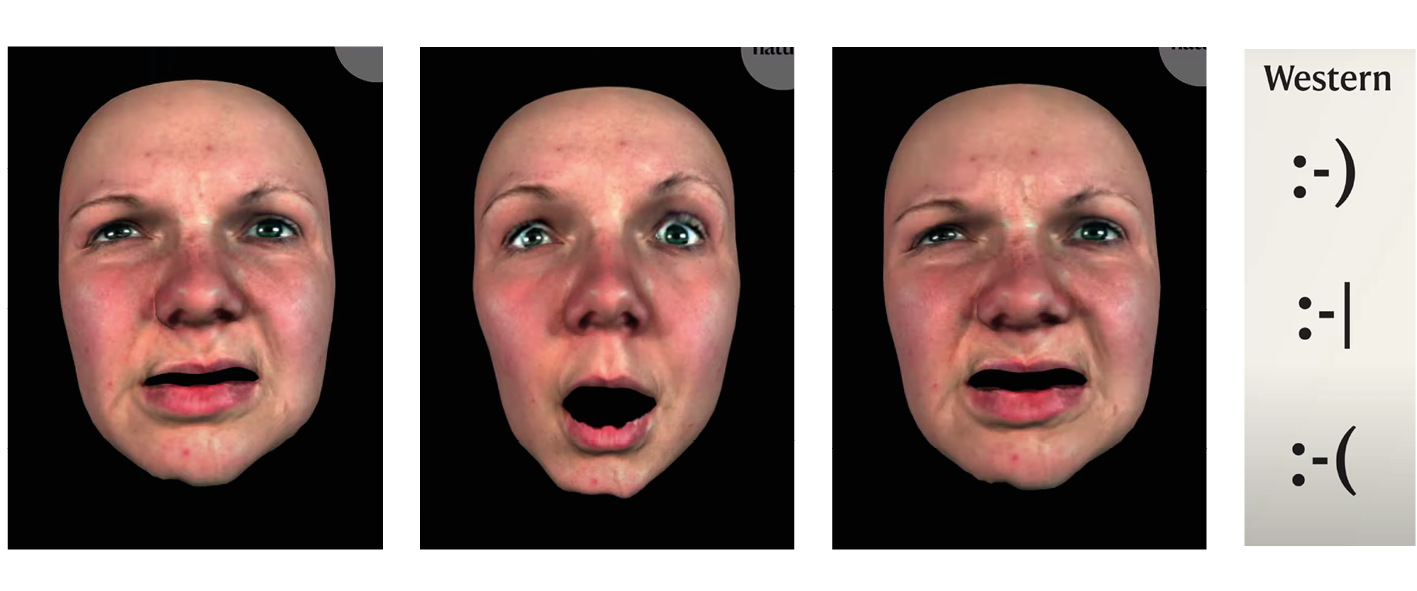

Looking at three very different opinions on the topic, it becomes clear that there are many contrasting and quite subjective notions of what fear is. One might find it difficult to draw a conclusion when overwhelmed by the diversity of the answers. For example, Joseph E. LeDoux makes clear that “it is not fear which can be seen as universal, but danger. And this experience of being in danger is obviously very personal and unique.”5 I mention his ideas because I agree with his approach to an extent, although in my opinion fear is an emotion experienced all over the world, even if in many different ways. Ralph Adolphs states that “fear is a psychological state with specific functional properties. Science is going to revise this picture and it will show that there are many different kinds of fear, that depend on a variety of neural systems.”5 One of the theories I find most interesting comes from Kay M. Tye. She says that “fear is an intensely negative internal state” and that “it resembles a dictator that makes all other brain processes its slave.”5 What a strong message.

In the next chapter I will introduce the power relations of religion and of newly established structures such as AI and other technologies. The reason I address this in my thesis is simple: our Western world, institutionalised by Christianity, is still strongly influenced by and built upon the stories written in the Bible: the Garden of Eden, Adam and Eve and the Fall of Man. These are origin stories about fear and temptation, anger and the devil. I question this given system and want to highlight the relevance of newly imposed power structures such as AI.