I can feel it damaging my eyesight. I’m trying to look away, but I keep staring at the surface of the screen, not sure what I’m seeing. Familiar, yet disturbing. My intuition tells me that these images want something from me, from you, from all of us. Coming from a place where nothing happens by chance, they are seeking my attention; they want me to listen to them, they want me to trust them.

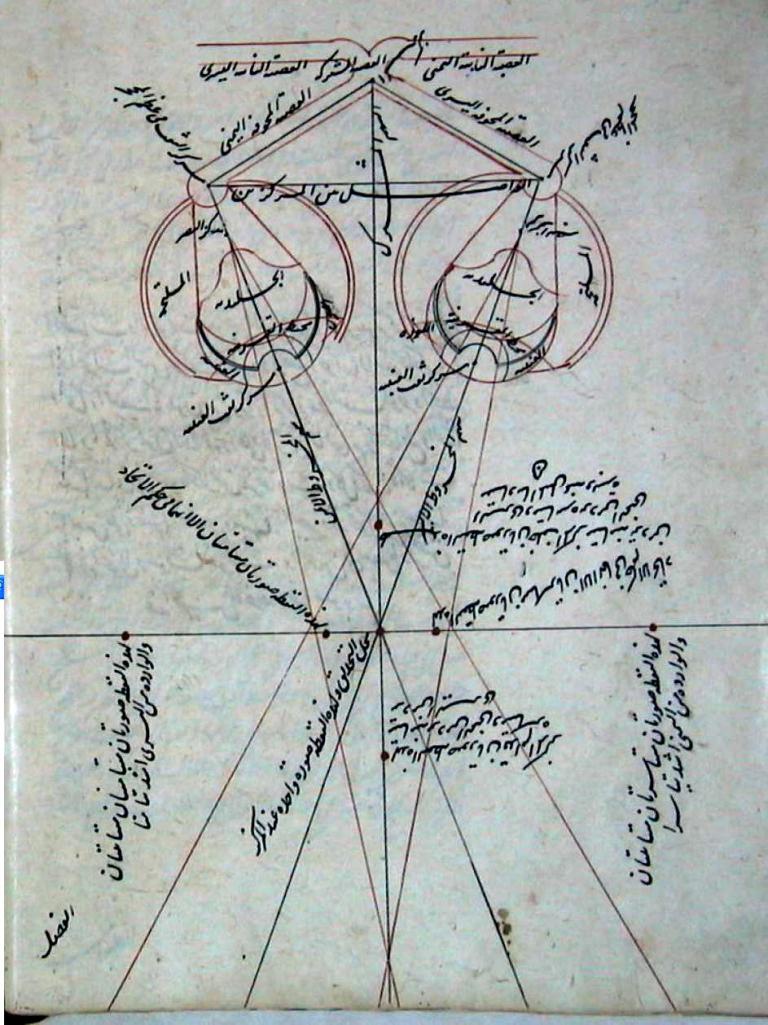

After Ibn al-Haytham established an early scientific understanding of how the human eye perceives visual phenomena in his Kitab al-Manazir, or Book of Optics, written in the early eleventh century, Renaissance artists tried to represent these phenomena accurately with the invention of linear perspective. In the 19th century, Joseph Nicéphore Niépce captured them through a camera lens, producing the earliest surviving camera photograph and creating the illusion of a three-dimensional space in which things appeared just as our eyes see them. From pigments to light, from light to ones and zeros: computer-generated imagery now allows us to simulate reality, displayed on the surface of our screens with an ever-increasing number of pixels and frames per second.

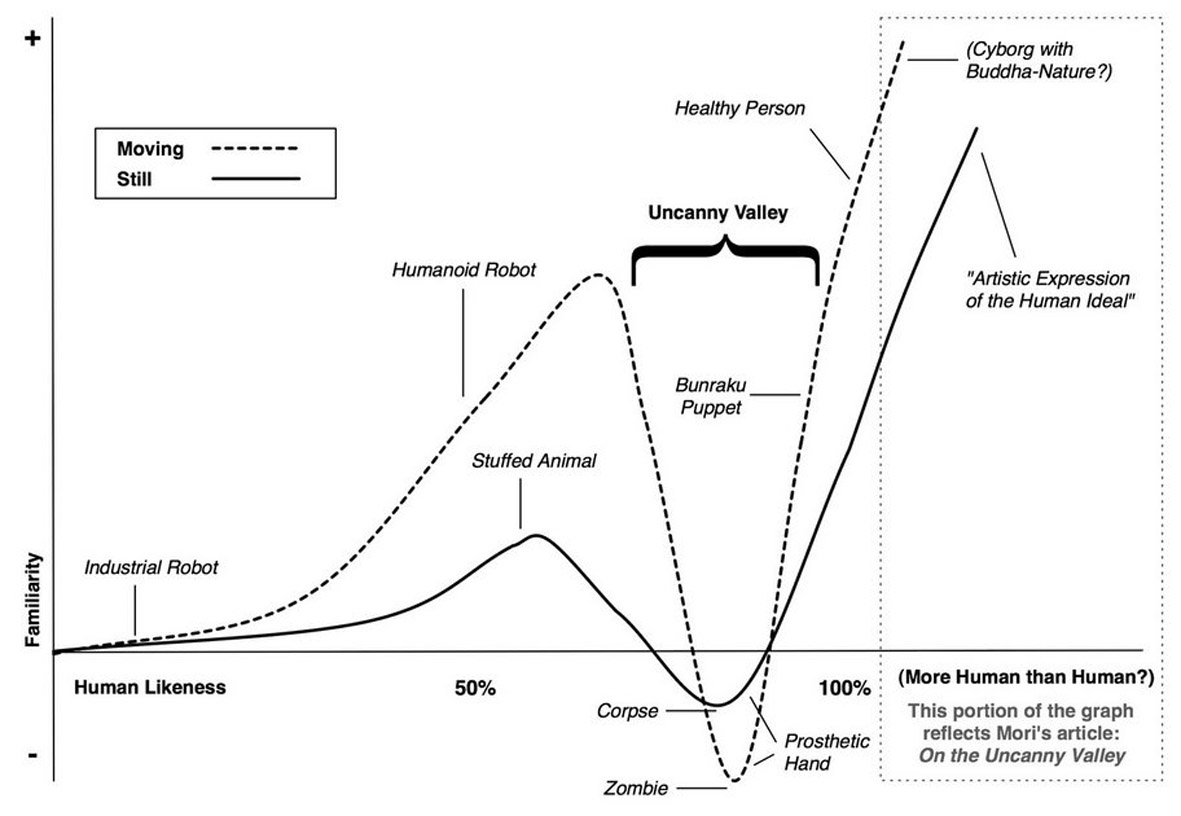

The uncanny valley, a concept developed by the Japanese robotics professor Masahiro Mori in his research on human emotional responses to non-human entities, is now far behind us, as Alan Warburton argues in his film Goodbye Uncanny Valley. The resolution of our screens also goes far beyond what our eyes can perceive, yet we keep developing these technologies to feed the entertainment industry’s demand for more realism. André Bazin, the 20th-century French film theorist and author of What Is Cinema?, divided this so-called realism into two categories, based on opposite tendencies he observed in the cinema of his time. The first seeks to express the world in its essence, while the second aims only to deceive, fooling the eye and the mind with illusory appearances.

“You can feel this very strange force that since the beginning, CGI are in competition with the cinematographic and cinematic images. Just like socialism wanted to defeat capitalism, they want to defeat these images and are probably on the verge to defeat them right now,” said the late filmmaker Harun Farocki, who died in July 2014. For the past 30 years, computer-generated images, built primarily to serve likeness or illusion, have been proliferating in politics, advertising and the entertainment industry. According to Farocki, we are facing the rise of computer-generated imagery, which is on its way to becoming the dominant image now that current technologies allow us to simulate every single piece of our environment. Given the feedback loop between representations and their subjects that we can observe throughout history, we can only wonder what realities, or what effects, these technologies will produce in their attempt to represent our world ever more accurately.

Digital technologies have not only provided us with new tools for image production: they have transformed our understanding of reality and changed our relationship to the making and circulation of images channelled by social networks, online video platforms and mainstream media. Erika Balsom, a London-based critic working on cinema, art and their intersection, reminded us of this in her keynote “Rehabilitating Observation: Lens-Based Capture and the ‘Collapse’ of Reality” at the 2018 edition of the Sonic Acts festival in Amsterdam, emphasising the importance of documentary practices in a time of planetary crisis, a post-truth era in which holding on to reality seems an emergency.

“For Hollywood, it is special effects. For covert operators in the US Military and intelligence agencies, it is a weapon of the future. Once you can take any kind of information and reduce it into ones and zeros, you can do some pretty interesting things.”

Daniel T. Kuehl, When Seeing and Hearing Isn’t Believing. Washington Post, 1999

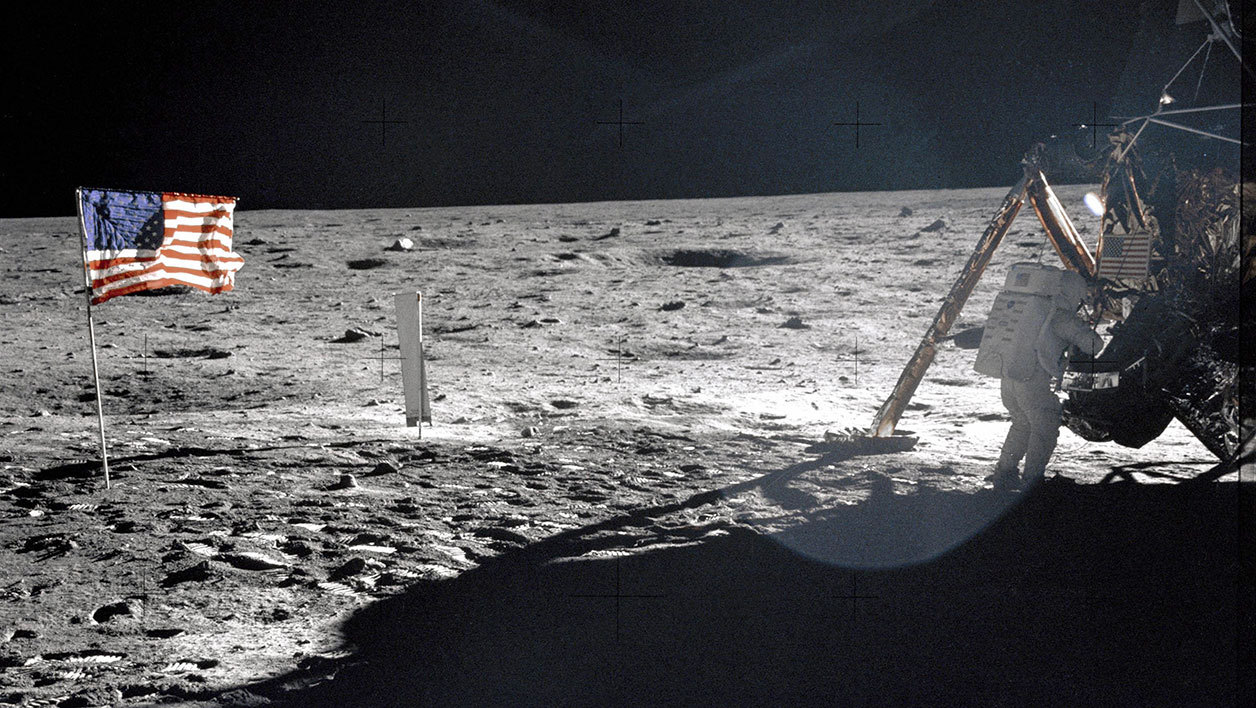

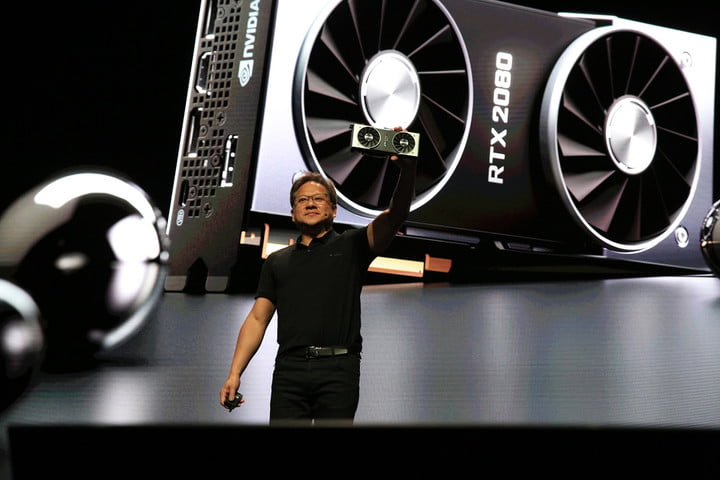

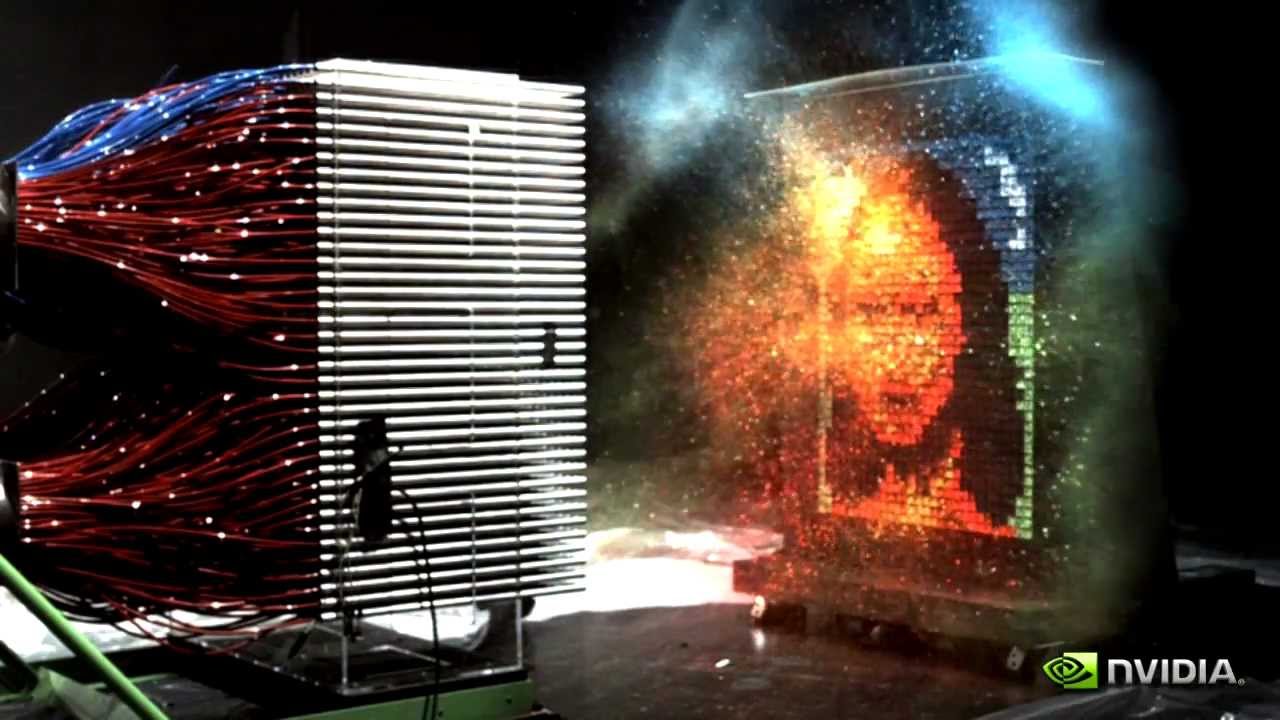

In December 1972, after $25.4 billion had been invested in the Apollo program, Americans in front of their CRT televisions watched Eugene Cernan become the last man to walk on the moon before his return to Earth on December 19th, after a 12-day mission, once again reinforcing the idea of American “technological superiority” over the USSR. For a few decades now, these images have been the subject of conspiracy theories and suspicions that they were shot in a studio. The same year saw the release of A Computer Animated Hand, a one-minute film produced by the computer scientist Edwin Catmull, later president of Pixar and Walt Disney Animation Studios, and Fred Parke as graduate students at the University of Utah. One of the earliest experiments in computer-generated imagery, it used 350 digitised polygons to generate a simplified representation of the surface of Catmull’s hand. The hand was animated with a program Catmull wrote, which would later help set the standards of today’s 3D software. These two events came together again a few months ago, when Nvidia, the Santa Clara-based company that has led the graphics card industry since it introduced what it marketed as the first GPU in 1999, published a video promoting the new architecture of its products, which now allows real-time ray-traced rendering. At the launch of the RTX series, Nvidia’s founder said: “using this type of rendering technology, we can simulate light physics and things are going to look the way things should look.” In this video, Nvidia produces a complete computer-generated re-enactment of the moon landing in the Unreal game engine in order to demonstrate the authenticity of the Apollo images.

Their project unwittingly underlines our ambiguous relationship to the images presented to us: contemporary image production technologies are used to dispel the very doubts they create, in a context where most of us can apparently no longer tell the difference. Kim Laughton and David OReilly proved this, almost by accident, with a joint project.

Kim Laughton and David OReilly are two CG artists based in Shanghai and Los Angeles respectively. On the blogging platform Tumblr, they collaborated on a project titled #HyperrealCG: a collection of banal images (still lifes, landscapes, portraits and other objects), each followed by a caption giving more information about its supposed author and the software used to produce it. Its stated purpose was to showcase “the world’s most impressive and technical hyper-real 3D art”. The images published on the website were in fact photographs found while browsing the web or taken by Laughton and OReilly themselves. The enterprise was meant as a comment on fellow artists driven by the desire to achieve photorealism in their work, who consider it a sign of artistic quality. The Huffington Post published a clickbait article titled “You Won’t Believe These Images Aren’t Photographs” and later apologised, retitling it “You Won’t Believe These Images Aren’t Photographs, Because They Are Photographs”. #HyperrealCG started spreading across the web. Eventually the two artists revealed their intentions on their Twitter account: they were making a good joke but were “not trying to fool anyone”.

We are experiencing an unprecedented crisis of public trust in professional news media, as “alternative facts”, “post-truth” and “fake news” have become part of our daily language, promoted in part by politicians such as Donald Trump since his election in 2016. Computer-generated imagery and artificial intelligence are getting better at creating convincing illusions. Meanwhile, their use by hoaxers and propagandists to generate fake or doctored content reinforces our doubts about the authenticity of visual media.

In 2017, Eliot Higgins, the founder of Bellingcat, an online organisation that conducts open-source international investigations, discovered that the Russian defence ministry had shared on Twitter still images taken from the promotional video of AC-130 Gunship Simulator: Special Ops Squadron. The images were released as evidence of collaboration between the United States and the Islamic State of Iraq and the Levant (ISIL), accompanied by a statement: “The US are actually covering the ISIS combat units to recover their combat capabilities, redeploy, and use them to promote the American interests in the Middle East.” What they show is a low-resolution, black-and-white view from above, overlaid with a crosshair in the middle of the screen, where the player opens fire on targets. The game’s interface crudely replicates the aesthetics of military drone monitors, and the fact that this was enough says a lot about our difficulty in identifying what we are looking at.

Computer-generated imagery seems perfectly suited to weaponisation in an age of untrustworthy images, where deepfake videos are already all over the internet: just ask Nicolas Cage. Artificial intelligence has recently made it possible to put words in anybody’s mouth. Going well beyond the face substitutions of Josh Kline’s Hope and Change, also made with machine learning a few years earlier, Synthesizing Obama, published in 2017 by Supasorn Suwajanakorn, a researcher at the University of Washington’s Graphics and Imaging Laboratory, showed that a single image was enough to simulate a three-dimensional facial model, although more footage was needed to reproduce the imperfections and wrinkles generated by each facial expression. Finally, “a simple averaging method” sharpened the colours and textures, yielding a fully controllable facial model that can be driven by any video input. Stanford University had already developed another program with the same purpose, whose results were rendered in real time with only a webcam as input. Similar open-source algorithms are now available on platforms like GitHub under the MIT License, free to use. Generating images with machine learning is not easy, however, and does not happen with the click of a button: at least a basic understanding of programming is still required, along with suitable hardware. But the accuracy of the results these technologies produce is only part of the problem, as are their cost and technicality. Their very existence is a new source of doubt in a time we could already call uncertain: a climate of ambient, collective paranoia, reinforced by conspiracy theorists of all kinds who may not stay confined to the darkest corners of the web forever.

When Julian Assange, whistleblower and founder of WikiLeaks, was interviewed by the Australian journalist John Pilger for RT (Russia Today) about the US elections and Hillary Clinton’s campaign, the video published on YouTube showed a few irregularities, or glitches, in parts of the interview. In another video, called 5 Reasons Julian Assange Interview with John Pilger is FAKE ?#WhereisAssange #ProofOfLife, its author compares the software mentioned earlier with the visual anomalies visible in the interview. After 6 minutes and 54 seconds of conspiracy theory, in which a badly recorded voice with a strong British accent, set against an anxiety-inducing soundscape, tries to convince me that Assange’s interview was faked, I almost buy it. The steady growth of our ability to doctor images and to generate photorealistic, physically accurate 3D renders is now closely linked to our growing inability to believe any image at all.

“Democracy depends upon a certain idea of truth: not the babel of our impulses, but an independent reality visible to all citizens. This must be a goal; it can never fully be achieved. Authoritarianism arises when this goal is openly abandoned, and people conflate the truth with what they want to hear. Then begins a politics of spectacle, where the best liars with the biggest megaphones win.”

Timothy Snyder, Fascism is back. Blame the internet. Washington Post, 2018

With the support of the AI Foundation, Supasorn Suwajanakorn is currently developing a browser extension called Reality Defender, promoted in the following words: “the first of the Guardian AI technologies that we are building on our responsibility platform. Guardian AI is built around the concept that everyone should have their own personal AI agents working with them through human-AI collaboration, initially for protection against the current risks of AI, and ultimately building value for individuals as the nature of society changes as a result of AI”. The software is designed to scan images and videos for artificially generated content, helping users identify the “fakes” they might encounter on the web. The project partly came to life to counter the potential misuse of his own work, creating another cat-and-mouse game in which turning algorithms against themselves seems to be the only way to solve the problems they create.

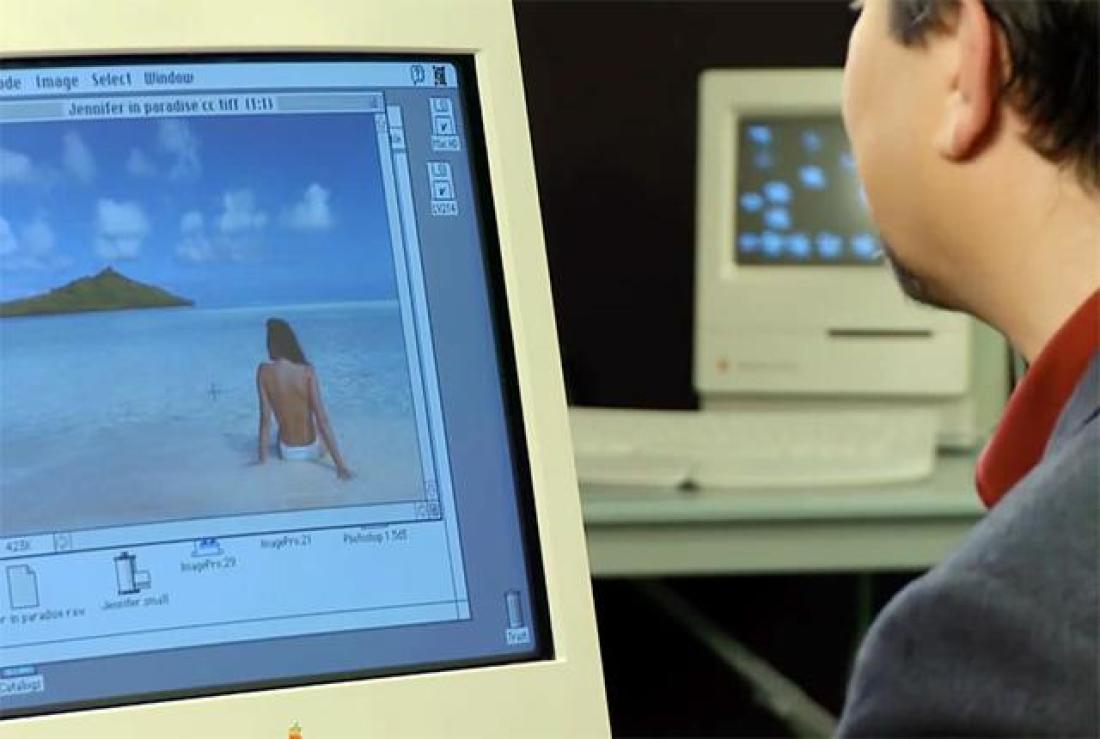

Sitting topless on a beach on the island of Bora Bora over 30 years ago, her back turned to John Knoll and his camera, Jennifer could probably not have imagined that the intimate moment she was sharing with her partner would become a turning point for the future of image manipulation.

That day, laying the ground for today’s confusion, reality suddenly became more malleable. John Knoll and his future wife Jennifer were both employees of ILM (Industrial Light & Magic), one of the first and still one of the biggest special effects companies, founded in 1975 for the production of George Lucas’s Star Wars. Together with his brother Thomas, then a PhD student working on computer vision at the University of Michigan, John Knoll designed one of the first raster graphics editors. Digitised images were rare back then, and they needed an image to ship with the software so that their clients could play with it. John had the only image he had at hand that day scanned: the picture of Jennifer. The software was called Photoshop; Adobe Systems licensed it and released it in 1990. The picture, titled Jennifer in Paradise, is an important artefact in the history of images and software. It disappeared for a while, until Adobe published the video Photoshop: The First Demo on its YouTube channel to celebrate the software’s anniversary. Shortly after this release, the Dutch artist Constant Dullaart, whose work and research revolve around the internet and its culture, decided to reconstruct this emblematic picture from screenshots of the Photoshop demo. He later used it as the main material for his own project, also titled Jennifer in Paradise, in which he recreated Photoshop filters by engraving a set of glass sheets. This project gave new importance to an image many people had forgotten or never known about, allowing it to find its place on the web.

We are now living in a time of uncertainty, a time in which reality is apparently under attack, if it has not already collapsed under the pressure of postmodernist academics*, followed by the spectacle of politics and the development of image production techniques such as computer-generated imagery. This technological development allowed us to perceive and shape new worlds, which later crossed the surface of the screen to inhabit ours, as Hito Steyerl, a filmmaker, artist and writer focused on media and technology who questions the modes of production and circulation of images, theorises in her essay “Too Much World: Is the Internet Dead?”: “Reality itself is post-produced and scripted”, meaning that “the world can be understood but also altered by its own tools.”

.

Chapter 2

Come Across

The digital image was already characterised by its mutability: transformed, combined, altered, its old digits replaced by new ones. This dynamic can be pushed further if we keep in mind that the photographic image, or so-called lens-based capture, could not come to life without the encounter and collaboration of three factors: authorial intention, the world as raw material, and the camera as a form of technological mediation. For David Claerbout, “the photographer and his subject coproduce one another”: they need each other to exist. With computer-generated imagery, this interdependency no longer exists, since 3D software and its interfaces offer a space of total control where everything is premeditated and contingency has no place: a landscape of computer-generated objects, bodies and environments in which every component, every detail, is a question its author has to answer. Erika Balsom sees this form of world-making as a “seductive possibility of control over represented worlds as a distracting substitute of control over our own. Offering spectacle and entertainment while fulfilling a fantasy of governance, rationality and mastery in a time of crisis, uncertainty and environmental catastrophe.”

“The production of digital affective devices, which double as control mechanisms, is dependent on the decimation of every digitally under represented region of the world. As this new geography displaces the old, the digital subject becomes more visible than the physical subject. While the circulation of luxury goods, liberal professionals, tourists, and financial flows occupies the whole field of visibility, refugees, seasonal workers, immigrants, and illegal aliens are rendered invisible.”

Julieta Aranda and Ana Teixeira Pinto, Toaster, Task Rabbit, 2015

Meanwhile, the exploitation of cognitive processes such as facial recognition and mirror neurons has helped establish the commercial aesthetic and imagery we know today: drops of condensation slipping down the surface of a bottle, or the shiny stainless-steel curves of an iPhone X reflecting the light emitted by glowing geometric primitives. Multiplying like cancer cells, these appealing images made of polygons proliferate and colonise the media landscape, designed to make you feel and to evoke sensations by capitalising on our instincts.

Timur Si-Qin defines stock photography as an “Evolutionary Attractor”: “The dimensions of our image solution space represent the many dimensions that make images appealing, attractive and marketable. These dimensions are complex and varied but certain domains and constraints are identifiable. Physiological, social, economic, practical and ultimately evolutionary factors determine the existence of common image solutions.” We refer to stock photography here for its archetypal value, efficiency and seductive potential, the core of a hyper-capitalist visual language now powered by CGI technologies.

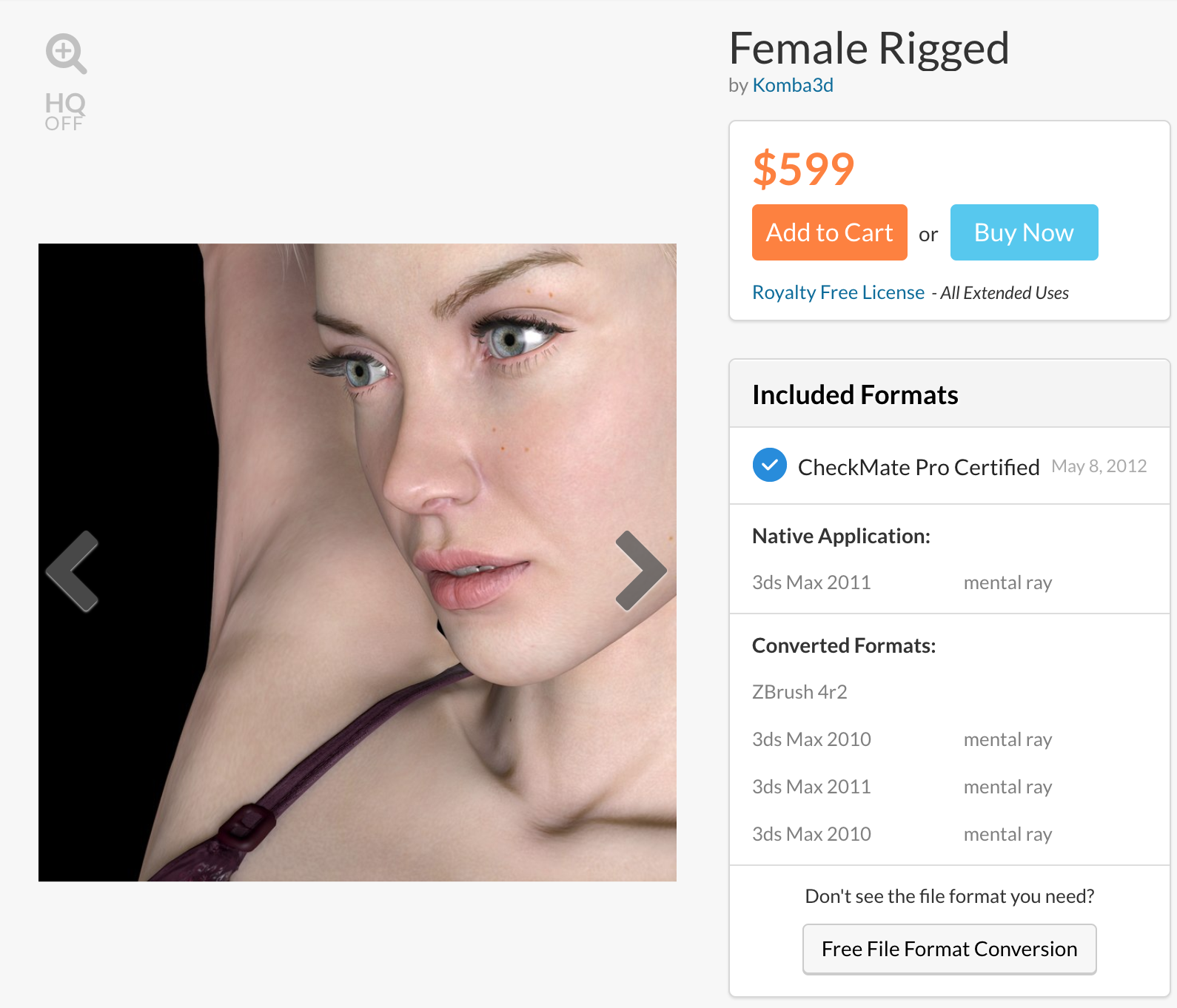

Kate Cooper, a Rijksakademie alumna and the founder of Auto Italia South East*, makes use of this language in her project Rigged, whose title refers to the bone structure embedded in a 3D model that makes its animation possible. The narrative revolves around a stereotypical, standard CG character in her 30s with brown hair and blue eyes, similar to many models you can find on websites like TurboSquid, an online marketplace for 3D objects. For her: “It’s not about reclaiming the world or aesthetics of hyper-capitalism, but about occupying or invading it”. She thus looks for a potential “freedom in the things that are supposed to restrict us”, although we may wonder whether, by using photorealistic renders of standardised assets and digital bodies in her practice, she is contributing to the very dynamic she comments on.

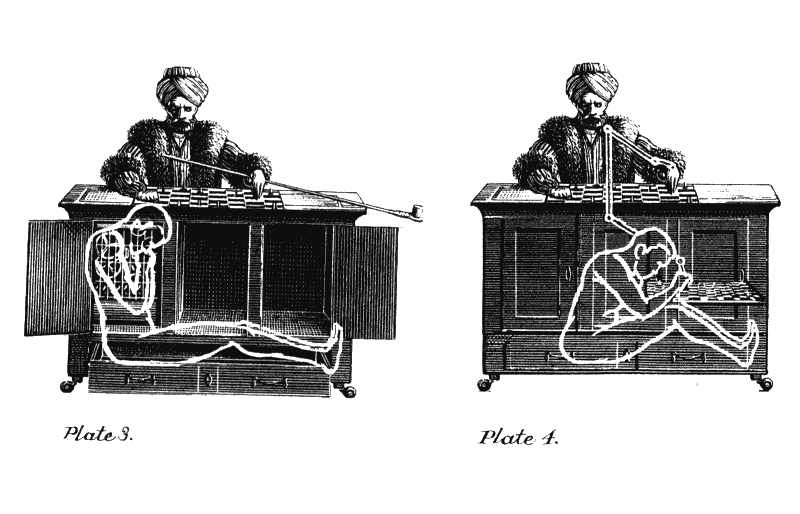

Here, every trace of production is carefully erased, making these images fully opaque and turning them into efficient vectors of ideology. Seeing the medium of his time, theatre, as a tool for manipulation, the playwright Bertolt Brecht theorised what he called the distancing effect in the first half of the 20th century: a new aesthetic and political programme designed to keep the viewer from any emotional involvement with fictional subjects, through methods such as breaking the fourth wall, the imaginary border between the stage and the audience. The idea was to deconstruct the medium, making its different layers and components visible, to push viewers to reflect critically on the narratives the play proposed. Directors such as Jean-Luc Godard later adopted this approach in cinema, and its translation into computer-generated imagery, breaking the engine, now seems an appropriate step towards a better understanding of CGI’s workings, agenda and function.

“Technologies of enchantment are strategies that exploit innate or derived psychological biases so as to enchant the other person and cause him/her to perceive social reality in a way that is favourable to social interest of the enchanter.”

Alfred Gell, Technology and magic.

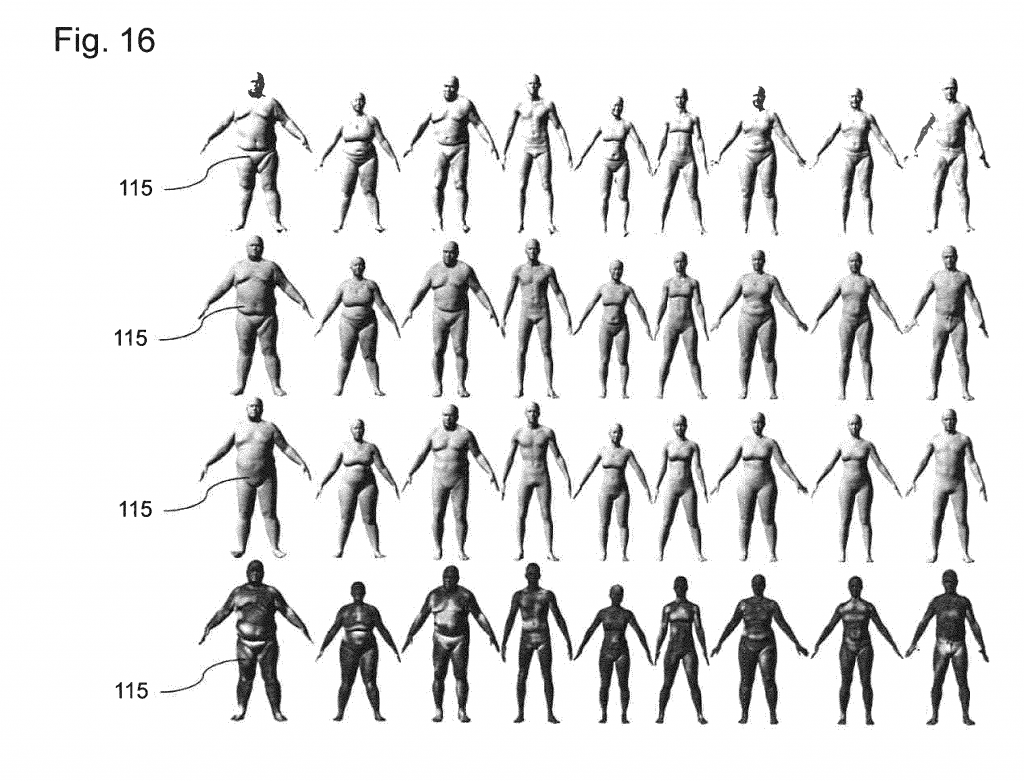

Simone C. Niquille is a designer based in the Netherlands. Under the name Technoflesh, her work questions the notion of identity and the contemporary digitisation of the human body through CGI technologies. For Niquille, design tools are “encoded with politics”, and if 3D software in a certain way promises to free us from physical realities, its architecture, settings and options need to be questioned. In the attempt to reproduce three-dimensional objects virtually, complexity and imperfections are erased, “unrecorded”, “marginalised”. Using Fuse, a free Adobe product designed to make 3D character design more accessible, she realised that it was built on anthropometric standards established by the CAESAR* project, which stands for Civilian American and European Surface Anthropometry Resource. The project was initiated by the U.S. Air Force Research Laboratory and ran between the 1990s and the early 2000s to create a database of body measurements for the Air Force’s cockpit and uniform design. In her essay “What Does the Graphical User Interface Want?”, she begins by emphasising the distortions born of our attempts to map the world, and how projections such as Mercator’s helped shape our perception of reality. Here again, translation often comes with loss.

These bodies now have a life of their own, and digital avatars such as @Lil Miquela, a soft-skinned, freckled 19-year-old millennial based in Los Angeles, or @Shudu, created by the photographer Cameron-James Wilson and inspired by the Princess of South Africa Barbie doll, are starting to populate the web. Erik Davis, author of TechGnosis, wrote twenty years ago that even though we know technological artefacts are not alive, we instinctively feel empathy towards them and cannot help it, words that resonate all the more in a time of booming CG influencers. TechGnosis, subtitled Myth, Magic, and Mysticism in the Age of Information, seeks connections between the digital and the spiritual, two worlds that seem unrelated today.

@Lil Miquela, or Miquela Sousa, is the product of Brud, a “trans-media studio that creates digital character driven story worlds”, which according to TechCrunch sources has already raised around six million dollars in investment from Silicon Valley firms such as Sequoia. She has released several tracks, such as You, Not Mine, Should You Be Alone and On My Own, reaching Spotify’s top 10. She has been interviewed by journalists from all over the world and shares her daily life on Instagram with more than 1.5 million followers. She hangs out with other CG millennials such as @Blawko22 and @BermudaIsBae, but also with real celebrities such as Noah Gersh, reinforcing the feeling that representations can no longer be contained or regarded as mere representations. An advocate for LGBT rights and against racism, she also models for brands such as Supreme, Vetements and Prada, and took over Naomi Campbell’s position as an ambassador for the make-up artist Pat McGrath. @Lil Miquela and @Shudu have probably already generated considerable profits, which go straight into their creators’ pockets, giving birth to a new CGI economy.

@Shudu can be seen as the embodiment of a post-colonial ideology and heritage: another form of neo-orientalism, created piece by piece by a white man, with the Princess of South Africa Barbie doll as his main reference, to fit his fantasy of what a “beautiful” black woman looks, or should look, like. A racialised digital body without a voice, created by and for the market and meant to be exploited by its creators, she reinforces the feeling that the traces and symptoms of centuries of colonial history remain in today’s society and its structures.

Objects and bodies aren’t the only components of our reality to be affected by their own computer-generated representations. Our cities and landscapes are also undergoing a mutation driven by the standard assets of 3D software. The artist and product designer Matthew Plummer-Fernandez highlighted part of this relationship in a 2014 interview for Dezeen: “You can spot what sort of software applications have been used for a particular building”.

Through the concept of Gulf Futurism*, the artist Sophia Al Maria, who is of American and Qatari descent, considers the region where she partly grew up “a projection of our global future”, and her practice draws mainly on the architectural, cultural and artistic tendencies of the Arabian Gulf: “A region that has been hyper-driven into a present made up of interior wastelands, municipal master plans and environmental collapse”.

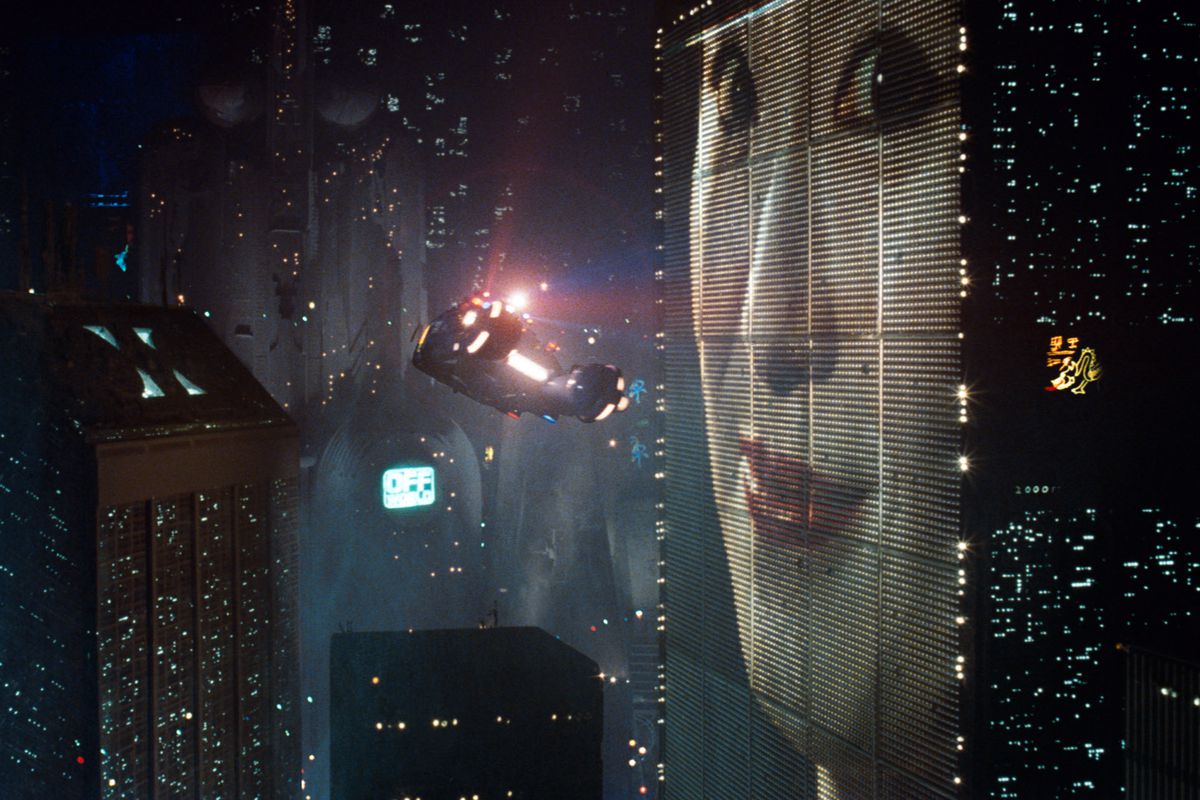

Built on desert, the UAE and other parts of the Gulf are in constant expansion, and the skylines built there over the last 20 years are inhabited by an idea of the future drawn from the imaginary of Western science fiction and its fetish for vertical cities. Syd Mead, the industrial designer well known for creating the environments of films such as Blade Runner (1982), visited Dubai as early as 2005 with Abdullah bin Hamad bin Isa Al Khalifa, son of the King of Bahrain, to discuss potential building projects. His thoughts were that “The Middle East is a fantastic example of how reality is catching up with the future as the size, scope and vision of some of the region’s projects clearly show. I would like to be a part of the region’s horizon and help shape it for a better tomorrow”.

The region’s rapid growth gives birth to industrially produced visions of the future, or “drag-and-drop utopias” as Tobias Revell* calls them, referring to the 3D architectural visualisations produced by companies such as the China-based Crystal CG, which draw the outlines of a world to come with default tools and assets. In this video, 1 minute and 59 seconds long, you can admire “The new spirit of Saudi Arabia”, designed by Adrian Smith and Gordon Gill and rendered by Visual Film House: 652 meters high, with 176 floors above ground, 59 elevators, 439 apartments and 2,205 parking spaces spread over 243,886 m². “Inspired by local desert plants”, the Jeddah Tower represents “new growth and high-performance technology fused into one powerful iconic form”, its architect says. Unfortunately, the tower, formerly known as Kingdom Tower, appears completely disconnected from its environment, like other computer-generated projections of future cities.

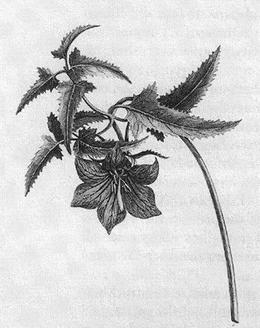

Reality is now produced by its own representation or simulation. Irregularities, multiplicity and accidents are treated as errors to be fixed through a process of reduction that echoes older demons. Our surroundings are starting to take the shape of an eighteenth-century botanical atlas, where instead of an array of singular specimens we are surrounded by ideals: simulations whispering that nature is messy and chaotic, that it needs to be perfected, and computation seems to be the tool of its metamorphosis.

This process of reduction probably originates a few centuries ago, in a scientific obsession with objectivity, when atlases were designed to record and make sense of the world. In that process, to decide whether a picture is an “accurate rendering of nature”, the atlas maker must “first decide what nature is”, as Lorraine Daston and Peter Galison write in “The Image of Objectivity”, their essay on scientific depictions of nature in relation to that concept.

“Reality will soon cease to be the standard by which to judge the imperfect image. Instead, the virtual image will become the standard by which to measure the imperfections of reality”

Harun Farocki

.

Chapter 3

Made of Metallic Dust

Fictional narratives, binary realities, singular visions of the future and flawless bodies are also made of matter. Sand has become precious: it is composed of quartz, the silicon dioxide, or silica, used to produce our hardware and the fibre-optic cables through which this website has most probably travelled.

Europium is a video essay directed by Lisa Rave, an artist living and working in Berlin whose practice focuses on film. Co-written with Erik Blinderman, the project underlines the ambiguous link between the hyper-saturated photographs of “Nature” often displayed in commercials for TV screens, ideal subjects for showing off the resolution manufacturers promote, and one of the main resources used to build the screens themselves. “Europium is not only the title and the subject of the film, it literally plays a role by making the images appear to the viewer as they are”, she tells Stefanie Hessler in an interview for Vdrome. Esther Leslie, professor of political aesthetics at Birkbeck, University of London, likewise focuses on the physicality of these images, questioning what they are made of and taking us through a history of the liquid crystals hidden under “any glass surface on which lively events take place”.

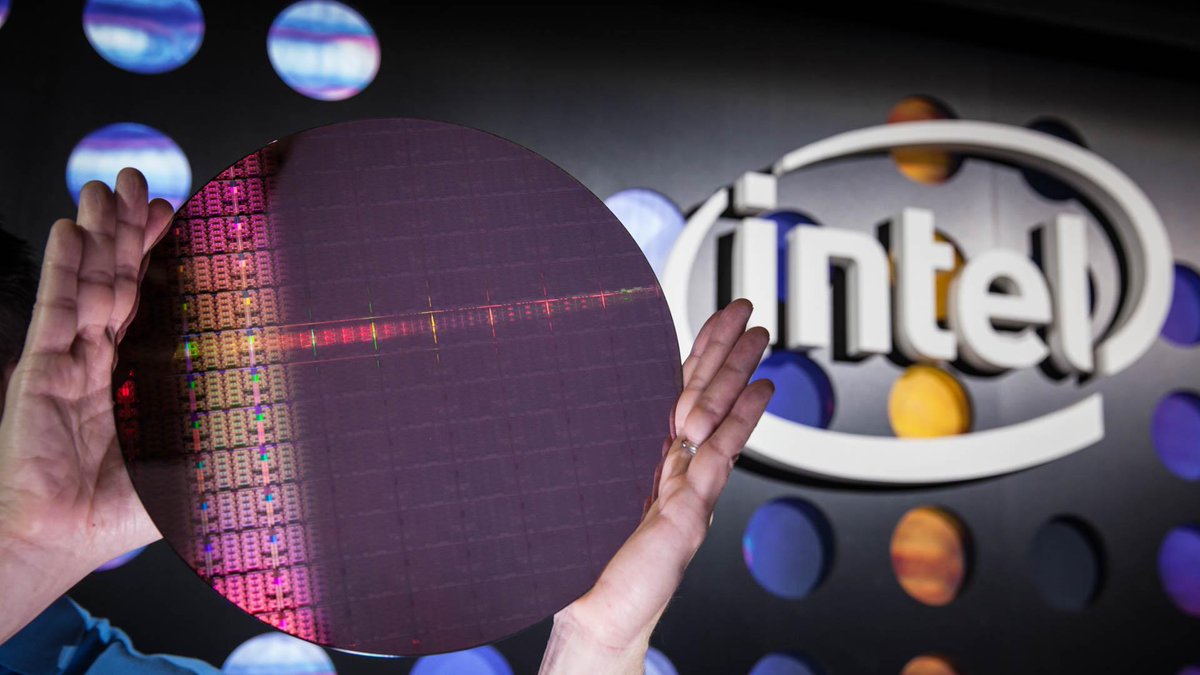

This process of transformation can easily be compared with the way CPUs and GPUs are produced and with their actual purpose. Here it is no longer about europium* or liquid crystals, but about silicon, the second most abundant element in the Earth’s crust. Valued for its qualities as a semiconductor, a balanced ability to conduct and resist electricity, it is what computer chips have been made of until now, and it gave its name to the well-known valley. We could trace a similar path by considering how the industry, or let’s say we, managed to build out of sand, clay and rock these images that haunt us, while its mining leaves scarred landscapes and disrupted ecosystems behind.

In the late 1940s, William Shockley, then a physicist at Bell Labs, co-invented the transistor with John Bardeen and Walter Brattain. He later left Bell Labs to found his own company in Mountain View, in California’s Santa Clara Valley, near Stanford University and less than an hour from San Francisco. Shockley was among the first to focus exclusively on silicon for the production of transistors, the basis of a semiconductor industry now worth hundreds of billions of dollars. Through a complex, multi-stage production process, silicon is purified to 99.99999999999% and grown into ingots, which are cut into wafers and sold to companies such as Intel that turn them into computer chips.

Robert Noyce, one of Shockley’s employees, later co-founded Intel, which released its first microprocessor, the 4004, in 1971 with 2,250 transistors; since then, their number has increased exponentially, as Moore’s law predicted. Gordon Moore, Noyce’s fellow co-founder at Intel, now the leading company in the processing unit (CPU) industry, predicted in 1965 that the number of transistors that could be placed on a chip at the lowest cost per transistor would double at a regular pace: every year in his original prediction, a rate he revised in 1975 to every two years. The often-quoted period of eighteen months implies an increase of almost 60 percent per year. Intel’s 14-nanometre technology reached consumers in 2014, and we are heading towards mainstream production of the coming 10-nanometre technology, which packs 100 million transistors per square millimetre according to IEEE Spectrum.
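The yearly growth implied by a given doubling period can be worked out directly. A short derivation, assuming an idealised, constant doubling period $T$ (in years) and a starting transistor count $N_0$; real chip generations never follow such a smooth curve:

$$
N(t) = N_0 \, 2^{t/T}, \qquad \frac{N(t+1)}{N(t)} = 2^{1/T}
$$

$$
T = 1.5:\quad 2^{1/1.5} = 2^{2/3} \approx 1.59 \;(\approx 59\%\ \text{per year}), \qquad
T = 2:\quad 2^{1/2} \approx 1.41 \;(\approx 41\%\ \text{per year})
$$

Compounded over a decade, an eighteen-month doubling gives $2^{10/1.5} \approx 100$, roughly a hundredfold increase.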

While Intel keeps trying to keep Moore's law alive with a potential 7-nanometer technology, NVIDIA, which in 1999 launched the GeForce 256, marketed as the first GPU, is betting on its end and promises a “1000X speed up of GPU computing by 2025”. While Intel's high-end CPUs have reached a limit of 28 cores, Nvidia's latest GPUs include 3,584 of them, although a GPU core is a far simpler unit than a CPU core. Simulating geometry, light, shadows, materials, physics and fluids requires millions to billions of computations per second, which makes it an intensive task, and these are problems GPUs are better at solving: with thousands of cores, their architecture is suited to performing a huge number of operations in parallel. That is where the similarity between 3D rendering and deep learning lies, since both come down to vast numbers of simple calculations that can run at the same time.
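The point about parallelism can be illustrated with a toy sketch. It assumes a simple Lambertian (diffuse) shading model, which the essay does not mention, and a hypothetical fixed light direction: each pixel's brightness depends only on its own surface normal, so pixels can be computed in any order or all at once. The Python thread pool merely stands in for a GPU's many cores; in CPython it brings no real speed-up and only shows that the work splits cleanly.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Hypothetical light direction (unit length), pointing straight at the viewer.
LIGHT = (0.0, 0.0, 1.0)


def shade(normal):
    """Lambertian brightness of one pixel: cosine between its normal and the light, clamped at 0."""
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    dot = (nx * LIGHT[0] + ny * LIGHT[1] + nz * LIGHT[2]) / length
    return max(0.0, dot)


def render_serial(normals):
    # One pixel after another, as a single CPU core would do it.
    return [shade(n) for n in normals]


def render_parallel(normals, workers=8):
    # Pixels are independent, so they can be handed out to many workers at once;
    # pool.map still returns the results in the original pixel order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(shade, normals))


if __name__ == "__main__":
    # A tiny "image" of surface normals: facing the light, tilted, facing away.
    image = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 0.0, -1.0)]
    print(render_serial(image))
    print(render_parallel(image) == render_serial(image))
```

What matters in this design is that `shade` reads no shared state besides the constant light direction, which is exactly what allows each pixel to be given to a different core.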

Tools that used to be produced to power images now apparently start to power reality itself. Nvidia currently has a team of more than 200 researchers working on artificial intelligence. “A Visionary, a healer, a creator and so much more. I am AI, brought to life by NVIDIA.” That is what a soft, feminine voice-over tells us in the promotional video available on its website. GPUs appear to be the perfect tool to power the coming wave of automation.

On our journey, driven by the desire to achieve a more accurate, better, ideal representation of reality, navigating the media landscape has become a complex task. Since computer-generated images are on their way to replacing the photographic and cinematic image, we find ourselves endlessly questioning images, visual media, their modes of production and their agenda, while wondering what we are looking at. Slowly losing our grip on reality, we can no longer believe what we see in a new reality made of polygons and algorithms.

These representations imply a registration of the world that, too, does not come without consequences. Glossy, flawless and generic projections imply a process of reduction in which part of the complexity, the multitude that composes our reality, remains unrendered. Computer-generated imagery offered us a space of control, yet we have not succeeded in going beyond its standard assets and off-the-shelf solutions.

These different phenomena are rooted in another loss: the significant impact of the silicon mining industry. Earth materials, the core of our hardware, are necessary resources to power the images we are referring to.

We can observe an interesting dynamic in which reality is now produced by its own computer-generated representations: they have shattered the surface of the screen and crossed it to take place among us. It suddenly feels as though shaping new worlds might cost us our own. Automation, now powered by the same tools and hardware, seems to be the next shift to come, a step further in the production of new realities. In other words, the screen is broken.


“You can feel this very strange force that since the beginning, CGI are in competition with the cinematographic and cinematic images. Just like socialism wanted to defeat capitalism, they want to defeat these images and are probably on the verge to defeat them right now,” stated the late filmmaker Harun Farocki, who died in July 2014. Computer-generated images, constructed and designed with likeness or illusion as their main function, have progressively proliferated over the past 30 years, a phenomenon observable in politics, advertising and the entertainment industry. According to Farocki, we are facing the rise of computer-generated imagery, which is on its way to becoming the dominant image, since current technologies allow us to simulate every single piece of our environment. Observing a feedback loop between representations and their subjects throughout history, we can only wonder what realities or effects these technologies will produce in their attempt to achieve more accurate visual representations of our world.

Digital technologies not only provided us with new tools for image production; they also transformed our understanding of reality and changed our relationship to the fabrication and circulation of images channeled by social networks, online video platforms and mainstream media. Erika Balsom, a London-based critic working on cinema, art and their intersection, reminded us of this in her keynote presentation Rehabilitating Observation: Lens-Based Capture and the ‘Collapse’ of Reality at the 2018 edition of the Sonic Acts festival in Amsterdam, where she emphasised the importance of documentary practices in a time of planetary crisis, a post-truth era in which holding on to reality seems to be an emergency.

“For Hollywood, it is special effects. For covert operators in the US Military and intelligence agencies, it is a weapon of the future. Once you can take any kind of information and reduce it into ones and zeros, you can do some pretty interesting things.”

Daniel T. Kuehl—When Seeing and Hearing Isn’t Believing. Washington Post, 1999

Kim Laughton and David O’Reilly are two CG artists based in Shanghai and Los Angeles respectively. On the blogging platform Tumblr, they collaborated on a project titled #HyperrealCG: a collection of banal images such as still lifes, landscapes, portraits and other objects, each followed by a caption giving more information about its author and the software used to produce it. Its stated purpose was to showcase “the world’s most impressive and technical hyper-real 3D art”. The images published on the website were in fact photographs collected while browsing the web or taken by Laughton and O’Reilly themselves. Their enterprise was meant as a comment on fellow artists driven by the desire to achieve photorealism in their work, who consider it a sign of artistic quality. The Huffington Post published a clickbait article titled “You Won’t Believe These Images Aren’t Photographs” and later apologised, retitling it “You Won’t Believe These Images Aren’t Photographs, Because They Are Photographs”. #HyperrealCG started spreading across the web. Eventually the two artists revealed their intentions on Twitter: they had made a good joke but were “not trying to fool anyone”.

We are experiencing an unprecedented crisis in the trust citizens place in professional news media, since “alternative facts”, “post-truth” and “fake news” became part of our daily language, partly promoted by politicians like Donald Trump since his election in 2016. Computer-generated imagery and artificial intelligence technologies are getting better at creating convincing illusions. Meanwhile, their use by hoaxers and propagandists to generate fake or doctored content reinforces the doubts we may have about the authenticity of visual media.

In 2017, Eliot Higgins, the founder of Bellingcat, a web-based organisation conducting international investigations, discovered that still images extracted from the promotional video of AC-130 Gunship Simulator: Special Ops Squadron had been shared by the Russian Ministry of Defence on Twitter. The images were released as a piece of evidence meant to prove the United States’ collaboration with the Islamic State of Iraq and the Levant (ISIL). The post was accompanied by a statement: “The US are actually covering the ISIS combat units to recover their combat capabilities, redeploy, and use them to promote the American interests in the Middle East.” A low-resolution, black-and-white view from above, overlaid with a crosshair in the middle of the screen where the player opens fire on targets: the game’s interface crudely replicates the aesthetics of military drone monitors, which tells us a little more about our inability to identify what we are looking at.

Computer-generated imagery seems perfectly suited for weaponisation in an age of untrustworthy images, where deepfake videos are already all over the internet; just ask Nicolas Cage. Artificial intelligence has recently allowed us to put words in anybody’s mouth. Going far beyond Josh Kline’s Hope and Change face substitutions, also made with machine learning a few years earlier, Synthesizing Obama, published in 2017 by Supasorn Suwajanakorn, a researcher at the University of Washington’s Graphics and Imaging Laboratory, needed only one image to simulate a three-dimensional facial model, although more footage was necessary to reproduce the imperfections and wrinkles that each facial expression generates. Finally, “a simple averaging method” sharpened the colours and textures, producing a fully controllable facial model that can be driven by any video as input. Stanford University had already developed another program with the same purpose, in this case rendering the results in real time with only a webcam as input. Similar open-source algorithms are now available under the MIT License, free to use, on platforms like GitHub. However, generating images with machine learning is not an easy task either and does not happen with one click of a button: at least a basic understanding of programming is still required, and the hardware has to keep up. Unfortunately, the accuracy of the results these technologies produce is only part of the problem, just like their cost or technicalities. Their very existence is a new source of doubt in a time we could already qualify as uncertain: a climate of ambient, collective paranoia, reinforced by conspiracy theorists of all kinds, that may not stay confined forever to the darkest corners of the web.

After Julian Assange, whistleblower and founder of WikiLeaks, was interviewed by John Pilger, an Australian journalist, for RT (Russia Today) about the US elections and Hillary Clinton’s campaign, the video was published on YouTube, and a few irregularities or glitches can be noticed in parts of the interview. In another video, titled 5 Reasons Julian Assange Interview with John Pilger is FAKE? #WhereisAssange #ProofOfLife, its author draws parallels between the software mentioned earlier and the visual anomalies, or glitches, noticeable in the interview. After 6 minutes and 54 seconds of conspiracy theories, in which a badly recorded voice with a strong British accent, mixed with an anxiety-inducing soundscape, tries to convince me that Assange’s interview is fake, I would almost buy it. The constant growth of our ability to doctor images or generate photorealistic and physically accurate 3D renders is now closely linked with our progressive inability to believe any image at all.

“Democracy depends upon a certain idea of truth: not the babel of our impulses, but an independent reality visible to all citizens. This must be a goal; it can never fully be achieved. Authoritarianism arises when this goal is openly abandoned, and people conflate the truth with what they want to hear. Then begins a politics of spectacle, where the best liars with the biggest megaphones win.”

Timothy Snyder—Fascism is back, blame the internet. Washington Post, 2018

Turning her back to John Knoll and his camera over 30 years ago, topless, sitting on a beach on the island of Bora Bora, Jennifer could probably not have imagined that the intimate moment she was sharing with her partner would become a turning point for the future of image manipulation. That day, setting the ground for today’s confusion, reality suddenly became more malleable. John Knoll and his future wife Jennifer were both employees of ILM (Industrial Light & Magic), one of the first and still one of the biggest special effects companies, founded in 1975 for the upcoming production of Star Wars, directed by George Lucas. Together with his brother Thomas, then pursuing a doctorate in computer vision at the University of Michigan, John Knoll would develop one of the first raster graphics editors. Digitised images were not common back then, and they needed an image to provide with the software so that their clients could play with it. John scanned the only image he had at hand that day: the picture of his wife. The software, called Photoshop, was then taken up by Adobe Systems, which released its first version in 1990. The picture of Jennifer in Bora Bora, titled Jennifer in Paradise, is an important artefact in the history of images and software. It disappeared for a while until Adobe published the video Photoshop: The First Demo on its YouTube channel to celebrate the software’s anniversary. Shortly after this release, the Dutch artist Constant Dullaart, whose work and research revolve around the internet and its culture, decided to reconstruct this emblematic picture from screenshots of the Photoshop demo. He then used it as the main material for his project, also titled Jennifer in Paradise, an attempt to re-create Photoshop filters by engraving a set of glass sheets. The project gave new importance to an image many people had forgotten or never knew about, allowing it to find its place on the web.

We are now living in a time of uncertainty, a time when reality is apparently under attack, if it has not already collapsed under the pressure of post-modernist academics*, followed by the politics of spectacle and the technological development of image production techniques such as computer-generated imagery. This technological development allowed us to perceive and shape new worlds, which later trespassed the surface of the screen to inhabit ours, as Hito Steyerl (filmmaker, artist and writer focused on media and technology, who questions the modes of production and circulation of images) theorises in her essay Too Much World: Is the Internet Dead?: “Reality itself is post-produced and scripted”, meaning that “the world can be understood but also altered by its own tools.”

Chapter 2

Come Across

“The production of digital affective devices, which double as control mechanisms, is dependent on the decimation of every digitally under represented region of the world. As this new geography displaces the old, the digital subject becomes more visible than the physical subject. While the circulation of luxury goods, liberal professionals, tourists, and financial flows occupies the whole field of visibility, refugees, seasonal workers, immigrants, and illegal aliens are rendered invisible”

Julieta Aranda and Ana Teixeira Pinto—Turk, Toaster, Task Rabbit, 2015

Timur Si-Qin defines stock photography as an evolutionary attractor: “The dimensions of our image solution space represent the many dimensions that make images appealing, attractive and marketable. These dimensions are complex and varied but certain domains and constraints are identifiable. Physiological, social, economic, practical and ultimately evolutionary factors determine the existence of common image solutions.” If we refer to stock photography here, it is for its archetypal value, its efficiency and its seductive potential, the core of a hyper-capitalist visual language now powered by CGI technologies.

Kate Cooper, a Rijksakademie alumna and a founder of Auto Italia South East*, makes use of this language in her project Rigged, whose title refers to the bone structure embedded in a 3D model that makes its animation possible. In this project, the narrative revolves around a stereotypical, standard CG character in her thirties with brown hair and blue eyes, similar to many other models you can find on websites like TurboSquid, an online marketplace for 3D objects. For her, “It’s not about reclaiming the world or aesthetics of hyper-capitalism, but about occupying or invading it”. She is in that way looking for a potential “freedom in the things that are supposed to restrict us”, while we may wonder whether or not she is contributing to the dynamic she comments on when she uses photorealistic renders of standardised assets and digital bodies in her practice.

Here, every trace of production is carefully erased, making these images fully opaque and turning them into efficient vectors of ideology. Seeing the medium of his time, the theatre, as a tool for manipulation, the playwright Bertolt Brecht theorised what he called the distancing effect in the first half of the 20th century: a new aesthetic and political program designed to keep the viewer from emotional involvement with fictional subjects through a set of methods such as breaking the fourth wall, the imaginary border between stage and audience. The idea was to deconstruct the medium, making its layers and components visible in order to push the viewer to reflect critically on the narratives the play proposed. Directors such as Jean-Luc Godard later adopted this approach in cinema, and it now seems that its translation into the space of computer-generated imagery, breaking the engine, would be an appropriate step towards a better understanding of its workings, agenda and function.

“Technologies of enchantment are strategies that exploit innate or derived psychological biases so as to enchant the other person and cause him/her to perceive social reality in a way that is favourable to social interest of the enchanter.”

Alfred Gell, Technology and Magic.

These bodies now have a life of their own, and digital avatars such as @Lil Miquela, a 19-year-old millennial with soft, freckled skin based in Los Angeles, or @Shudu, created by the photographer Cameron-James Wilson and inspired by the Princess of South Africa Barbie doll, are starting to populate the web. Erik Davis, author of TechGnosis, wrote twenty years ago that even though we know technological artefacts are not alive, we instinctively feel empathy towards them without being able to help it, and these words resonate even more strongly in a time of CG influencer baby boom. TechGnosis, subtitled Myth, Magic, and Mysticism in the Age of Information, tries to draw out connections between the digital and the spiritual, two worlds that nowadays seem unrelated.

@Lil Miquela, or Miquela Sousa, is the product of Brud, a “trans-media studio that creates digital character driven story worlds”, which according to TechCrunch has already raised around six million dollars from Silicon Valley investors such as Sequoia. She has already released several tracks, among them You, Not Mine, Should You Be Alone and On My Own, making her way into Spotify’s top 10. She has been interviewed by journalists from all over the world and shares her daily life on Instagram with more than one and a half million followers. She hangs out with other CG millennials such as @Blawko22 and @BermudaIsBae, but also with real celebrities such as Noah Gersh, reinforcing the feeling that representations can no longer be contained or considered as mere representations. Fighting for LGBT rights and against racism, she also models for brands like Supreme, Vetements and Prada, and took over Naomi Campbell’s position as ambassador for the make-up artist Pat McGrath. @Lil Miquela and @Shudu have probably already generated considerable profits from their activities, profits that go straight into their creators’ pockets, giving birth to a new CGI economy.

In the case of @Shudu, we can see her as the embodiment of a post-colonial ideology and heritage, another form of neo-orientalism: she was created piece by piece by a white man to fit his fantasy of what a “beautiful” Black woman looks or should look like, with the Princess of South Africa Barbie doll as a main reference. She is a digital racialised body without a voice, created by and for the market and meant to be exploited by her creators, reinforcing the feeling that traces and symptoms of centuries of colonial history remain in today’s society and its structures.

Objects and bodies are not the only components of our reality affected by their own computer-generated representations. Our cities and landscapes are also undergoing a mutation powered by the standard assets of 3D software, a relationship partly highlighted by Matthew Plummer-Fernandez, artist and product designer, who stated in a 2014 interview with Dezeen that “You can spot what sort of software applications have been used for a particular building”.

Through the concept of Gulf Futurism*, the artist Sophia Al Maria, who has American and Qatari roots, considers the region where she partly grew up “a projection of our global future”, and her practice is largely based on the architectural, cultural and artistic tendencies of the Arabian Gulf: “A region that has been hyper-driven into a present made up of interior wastelands, municipal master plans and environmental collapse”.

Built on desert, the UAE and other regions of the Gulf are in constant expansion, and the skyline built there over the last 20 years is inhabited by a certain idea of the future rooted in an imaginary shaped by Western science fiction and its fetish for vertical cities. As early as 2005, after visiting Dubai with Abdullah bin Hamad bin Isa Al Khalifa, son of the King of Bahrain, to discuss potential building projects, Syd Mead remarked that “The Middle East is a fantastic example of how reality is catching up with the future as the size, scope and vision of some of the region’s projects clearly show. I would like to be a part of the region’s horizon and help shape it for a better tomorrow”. Mead is a well-known product designer who was heavily involved in creating the environments for films like Blade Runner, released in 1982. The fast growth and development of the area give birth to industrially produced visions of the future, or “drag-and-drop utopias” as Tobias Revell* would call them, referring to the 3D-rendered architectural visualisations produced by companies such as China-based Crystal CG, which draw the outlines of a world to come with default tools and assets. In a video lasting 1 minute and 59 seconds, rendered by Visual Film House, you can admire “The new spirit of Saudi Arabia”: designed by Adrian Smith and Gordon Gill, the tower is 652 meters high, with 176 floors above ground, 59 elevators, 439 apartments and 2,205 parking spaces spread over 243,886 m². “Inspired by local desert plants”, the Jeddah Tower represents “new growth and high-performance technology fused into one powerful iconic form”, its architect says. The Kingdom Tower, as it was originally called, unfortunately appears completely disconnected from its environment, like other computer-generated projections of future cities.

Reality is now produced by its own representation or simulation. Irregularities, multiplicity and accidents are treated as errors to be fixed through a process of reduction that echoes older demons. Our surroundings are starting to take the shape of an eighteenth-century botanical atlas where, instead of an array of singular specimens, we are now surrounded by ideals. Simulations whisper that nature is messy and chaotic, that it needs to be perfected, and computation seems to be the tool of its metamorphosis.

This process of reduction probably originates a few centuries ago, in a scientific obsession with objectivity, when atlases were designed to record and make sense of the world; a process in which, to decide whether a picture is an “accurate rendering of nature”, the atlas maker must “first decide what nature is”, as Lorraine Daston and Peter Galison write in their essay The Image of Objectivity, which examines scientific depictions of nature in relation to that concept.

“Reality will soon cease to be the standard by which to judge the imperfect image. Instead, the virtual image will become the standard by which to measure the imperfections of reality”

Harun Farocki

Chapter 3

Made of Metallic Dust

Europium is a video essay directed by Lisa Rave, an artist living and working in Berlin whose practice focuses on film. Co-written with Erik Blinderman, the project underlines the ambiguous link between a corpus of hyper-saturated photographs of “Nature” often displayed in advertisements for TV screens (a perfect subject to demonstrate the resolution manufacturers try to promote) and one of the main resources used to build and power the screens themselves. “Europium is not only the title and the subject of the film, it literally plays a role by making the images appear to the viewer as they are,” she tells Stefanie Hessler in an interview for Vdrome. Esther Leslie, professor of political aesthetics at Birkbeck in London, also focuses on the physicality of these images, questioning what they are made of and taking us through a history of the liquid crystals hidden under “any glass surface on which lively events take place”.


Rehabilitating Observation: Lens-Based Capture and the ‘Collapse’ of Reality—Erika Balsom, Sonic Acts

Resisting Reduction: A Manifesto—Joichi Ito, MIT Press

The Image of Objectivity—Lorraine Daston and Peter Galison

By the Light of the Moon: Turing Recreates Scene of Iconic Lunar Landing—Brian Caulfield, NVIDIA Blog

Kate Cooper: hypercapitalism and the digital body—Jeppe Ugelvig, Dis

Lil Miquela: the AI Star on the Cover of Highsnobiety Issue 16

Fascism Is Back. Blame the Internet—Timothy Snyder, Washington Post

CGI & Photography—Alan Warburton

Deep Learning Solves the Uncanny Valley Problem—Carlos E. Perez, Medium

Lil Miquela, Shudu Gram... Qui sont ces mannequins 2.0 ? (Who Are These 2.0 Models?)—Vogue Magazine

Stock Photography as Evolutionary Attractor—Timur Si-Qin, DIS Magazine

The Silence of the Lens—David Claerbout, e-flux

Unstable Surfaces: Slippery Meanings of Shiny Things—Nic Maffei and Tom Fisher

The makers of the virtual influencer, Lil Miquela, snag real money from Silicon Valley—Jonathan Shieber, TechCrunch

How Moore’s Law Now Favours Nvidia Over Intel—Ken Kam, Forbes

When Seeing and Hearing Isn’t Believing—Daniel T. Kuehl, Washington Post

Gulf Futurism is Killing People—Nathalie Olah, Vice

5 Reasons Julian Assange Interview with John Pilger is FAKE? #WhereisAssange #ProofOfLife—Samad Tjaž

How Nvidia’s RTX Real-Time Ray Tracing Works—David Cardinal, ExtremeTech

Ikea's catalog now uses 75 percent computer generated imagery—Jacob Kastrenakes, The Verge

Subjects of the American Moon: From Studio as Reality to Reality as Studio—François Bucher, e-flux

Too Much World: Is the Internet Dead?—Hito Steyerl, e-flux

From automated cars to AI, Nvidia powers the future with hardcore gaming hardware—Jon Martindale, Digital Trends

The Era of Fake Video Begins—Franklin Foer, The Atlantic

Deep Fakes and The Future of Propaganda—Tyler Elliot Bettilyon, Medium

NVIDIA GeForce RTX - Official Launch Event

TechGnosis—Erik Davis

Seizing the Means of Rendering—Tobias Revell

What Does the Graphical User Interface Want?—Simone C. Niquille

Turk, Toaster, Task Rabbit—Julieta Aranda and Ana Teixeira Pinto, e-flux

The Technological Body—Simone C. Niquille, Impakt Festival

Jennifer in Paradise: The Story of the First Photoshopped Image—Gordon Comstock, The Guardian

The Ultra-Pure, Super-Secret Sand That Makes Your Phone Possible—Vince Beiser, The Telegraph

Russia Uses Video Game Pictures to Claim US Helped ISIL—Alec Luhn, The Telegraph

Elemental Machines—Esther Leslie, Transmediale

Goodbye Uncanny Valley—Alan Warburton

Unrendering Utopia—Mapping Festival Geneva

Rendering truth—Ars Electronica 2018