CROSS MODAL DESIGN

ABSTRACT

My main goal in writing this thesis is to find out how vision can make room for the other senses. We live in a visually oriented culture, and this is especially true for people who, like myself, work as designers. For those of us whose practice is almost entirely visual, it is crucial to question the importance of the sense organ we use the most. Are our eyes a necessity or just an advantage? Could I still be a designer if I became blind? Graphic design is usually described as visual communication. However, in our daily lives we use vision in combination with hearing in order to interact with one another. Therefore I will focus mainly on vision and hearing. The design profession places many visual conditions on top of our already existing pictorial obsession. Seeing is part of a collective unconscious we all share. The eye is at the top of our sensory hierarchy. What caused this hierarchical shift, and when did it happen? Is the notion of visual domination true, or is it merely an assumption? In this text I will explore different techniques and tools to circumvent the way we use our senses. What happens when one of our sense organs stops functioning, and what are the right substitutions for it? Can limiting our focus on vision make us see better?

SEE

We see to believe, we see to understand and we see to justify. We believe our seeing is proof positive. 01 We rely on our sense of vision to capture an action or a mood. The act of seeing is widely used in language, sayings and symbolism: Let's see; I see; I see what you mean; I see what you did there; I see where you're coming from; First see, then believe; See for yourself; You see? See you! The eye is the symbol of protection and life, the window to the soul, the omnipresent spirit of God, watchful over those who serve. It is a passageway into a new dimension, the light of the body. It confesses the secrets of the heart. It represents intelligence, light, vigilance, moral conscience and truth. 02

The eye is by far the most symbolized sense organ of the human body. fig. 01 Sight is, for many, the most praised and most highly valued sense. According to various sources, eighty to ninety percent of all the information that is transmitted to the brain is visual. Sixty percent of people are visual learners and fifty percent of the brain is dedicated to the visual function. 03 Our beloved marbles can make rapid movements called saccades, fig. 02 which can reach a peak rate of about 900 degrees per second, which is about 0.68 kph. That may seem slow, but imagine them rolling down the street.
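
The 0.68 kph figure can be checked with a little arithmetic. The sketch below is only an illustration and is not taken from the cited source: it assumes an eyeball of about 24 mm in diameter (a value not given in the text) and treats the eye as a sphere turning at the peak saccade rate, so the result is the speed of a point on its surface. The name `surface_speed_kph` is hypothetical.

```python
import math

# Assumption (not from the text): an adult eyeball is roughly 24 mm across.
EYE_DIAMETER_MM = 24.0


def surface_speed_kph(peak_deg_per_s: float, diameter_mm: float = EYE_DIAMETER_MM) -> float:
    """Speed of a point on the eyeball's surface, in kilometres per hour."""
    angular_rad_per_s = math.radians(peak_deg_per_s)  # degrees/s -> radians/s
    radius_m = (diameter_mm / 2) / 1000               # millimetres -> metres
    metres_per_second = angular_rad_per_s * radius_m  # v = omega * r
    return metres_per_second * 3.6                    # m/s -> km/h


print(round(surface_speed_kph(900), 2))  # 0.68
```

Under that assumed diameter, 900 degrees per second works out to roughly 0.68 kph, which matches the figure quoted above; a larger or smaller eye would shift the result proportionally.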

figure 01

All Seeing Eyes

figure 02

automatic saccadic eye movement detection

In 2014 a study by Professor Mary C. Potter and colleagues at MIT (Massachusetts Institute of Technology, Cambridge) revealed that the brain can process and interpret images presented to the eyes for as little as 13 milliseconds. 04 It is also widely claimed that visuals are processed 60,000 times faster than text, which is why one could argue that ‘a picture is worth a thousand words’. We love this sense dearly; most of us would choose it over the other senses and would never trade it.

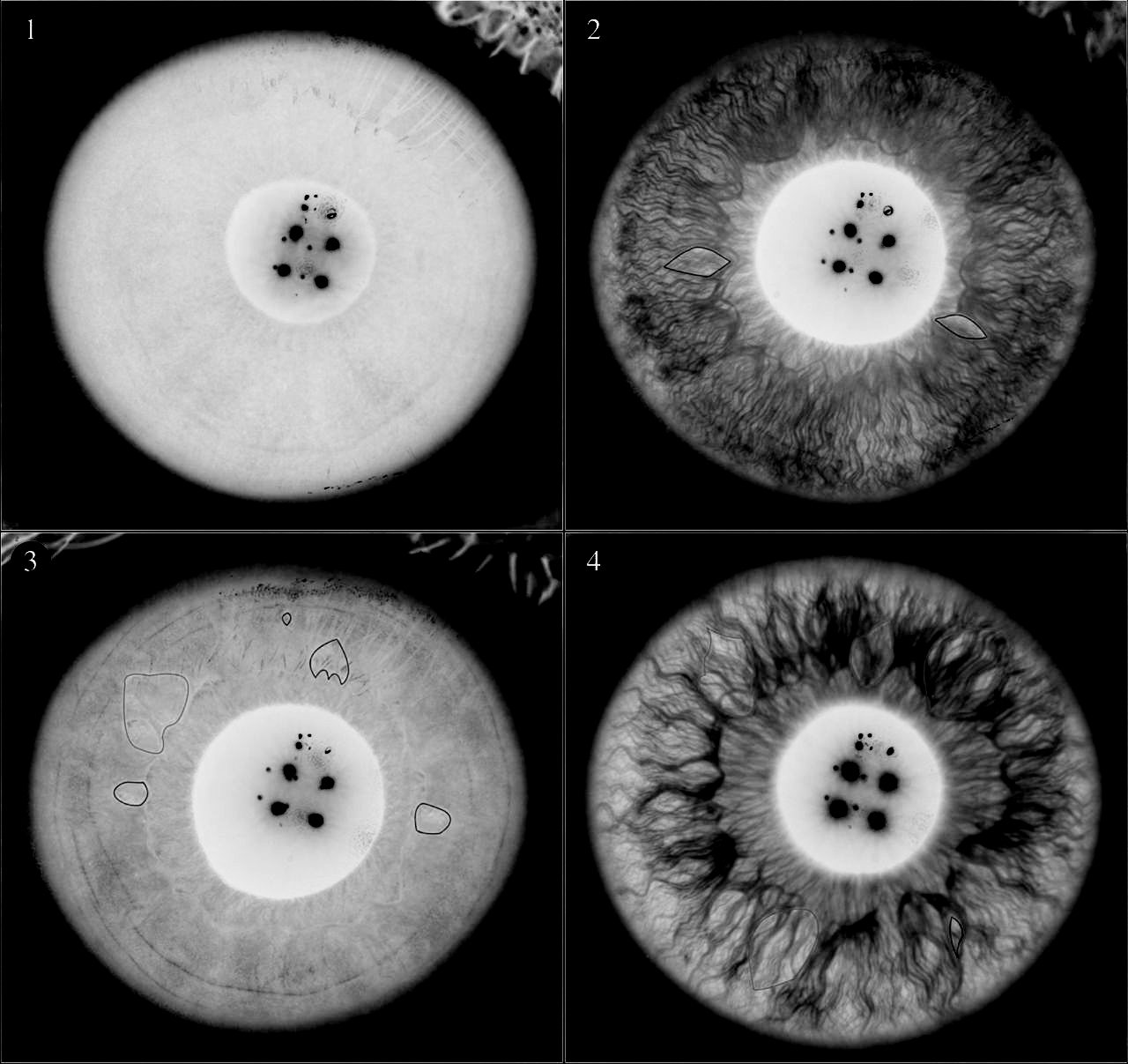

The eye is unique, one of a kind. Every single iris is different, like a fingerprint, and each iris can indicate different individual characteristics. This was discovered by scientists from Örebro University in Sweden. fig. 03 After a study of 428 individuals, their findings showed that those with densely packed crypts (threads that radiate from the pupil) are ‘gentle, tender-minded, warm, trustful and show positive emotions’. In comparison, those with more furrows in the iris were more ‘neurotic, impulsive and likely to give way to cravings’. 05 So, in a way, it is actually true that the eyes are ‘the windows to the soul’.

figure 03

Overview of constitutional Rayid Iris structures

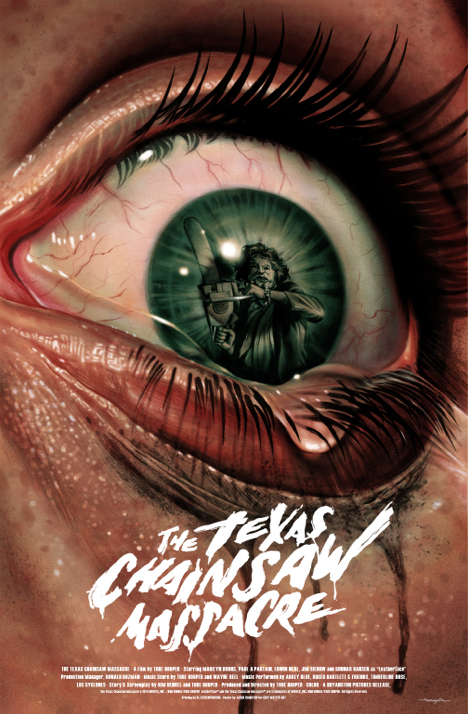

Although this discovery dates from 2007, designers seem to have always used the eye to attract attention. Think of the numerous movie posters that have a gigantic staring eye in the centre. They usually try to capture the whole story within one image of the eye. It can show the vulnerability of a victim in a horror movie, or the evil monster that is up to no good. An eye can show a lot of emotions, which is exactly why it is so widely used in advertising. Eyes lure us into the poster or image. fig. 04

figure 04

Texas Chain Massacre, movie poster

We think of our eyes as tools that show us all the wisdom and truth around us. They are ‘wise seers’ that generate a clear vision and a true interpretation of how we perceive the world. However, all the eye really does is gather light. Seeing, as we think of it, does not happen in our eyes but in our brain. We are seeing life in fast-forward, and our visual brain predicts future events based on past experience. This becomes clear when one is exposed to (optical) illusions. ‘If the eye’s powers are appreciated by science, so too are its limitations’. 06 Take, for instance, the ‘Vanishing Ball Illusion’, 07 a very basic trick in which a magician throws a ball up a couple of times. On the final throw, he pretends to throw it up while he is actually holding it back. Our mind is predicting future scenarios every second, and this time it predicts that the ball will go up, so it creates the illusion of the ball vanishing in mid-air. fig. 05

In fact, every single visual experience could be an illusion: Humans can see only a fraction of the light waves of the total spectrum; the human eye has a blind spot where the optic nerve connects with the retina; human vision is limited by its capacity to focus on objects only a certain distance from the eye… 08

While a lot of species use other senses as their main guidance through life, most people rely on their eyes and, even more, are obsessed with them. Our obsession with imagery has caused a global visual overload. 09 We are greedy for images and demand them alongside everything. Text, music and radio shows are accompanied by images. Throughout the twentieth century, imagery has increasingly infiltrated the urban landscape. Road signs and billboards have become such a large element of our perception of the world that it would feel empty without them. Even outside of the city we can’t escape from visual bombardments. fig. 06 We almost don’t notice them anymore, which is why advertisers are trying so hard to stand out from others and attract attention. They have to shout at us in order for us to see or hear them. An eye-catcher from a few years ago might now be just a blur to us. fig. 07

figure 06

Billboards across an Egyptian highway

figure 07

Screaming headlines

The eye has not always been at the top. It was not even second: it used to hold third place in the hierarchy of the senses. In ‘The Problem of Unbelief in the Sixteenth Century’, Lucien Febvre argues that: ‘The sixteenth century did not see first: it heard and smelled, it sniffed the air and caught sounds. It was only later, as the seventeenth century was approaching, that it seriously and actively became engaged in geometry, focusing attention on the world of forms. It was then that vision was unleashed in the world of science as it was in the world of physical sensations, and the world of beauty as well’. 10 The hierarchy of the senses might be just a subjective concept that is different everywhere. In this text I am mainly focussing on Western culture, but if we look across other cultures, we will see all kinds of hierarchies and even different understandings of the senses. For example, the Javanese ‘have five senses (seeing, hearing, talking, smelling and feeling), which do not exactly coincide with our five’. 11 The Cashinahua people of Peru fig. 08 hold that there are six senses – or rather, percipient centers: the skin, the hands, the ears, the genitals, the liver and the eyes. 12 Such historical and cross-cultural variation gives the lie to any notion of our fivefold division of the sensorium being natural and indisputable. As ideas about the senses can differ at so fundamental a level, one should not be surprised to find that substantial differences also exist as to the relative separation or interpenetration of the senses. 13

figure 08

Cashinahua Tribe, Peru

Coming back to Lucien Febvre’s argument: in the West, we started focussing on form, composition and aesthetics, which has had both good and bad results. We may now have better guidance in finding our way, and we can see beauty in everyday objects, but this focus also neglects the experience of sound, which may be invisible to us but affects us drastically, both physiologically and psychologically. It affects our health, behavior, productivity and therefore our accuracy. Noise levels in hospitals and healthcare facilities have doubled since 1972, 14 which has substantially increased dispensing errors through loss of concentration and stress caused by the noise. Modern architecture focusses on form and functionality, but takes acoustic experience for granted. Julian Treasure, public speaker and CEO of The Sound Agency in the U.K., wonders: ‘Do architects have ears?’ Classrooms and offices are designed to be too open, which makes them too noisy. For instance, fewer than half of the pupils can hear the teacher without losing focus because of a long reverberation time. Dividers could be a solution, but they break up the spaces into tiny, uncomfortable sizes. 15 On the other hand, too quiet isn’t good either. Silence creates an unpleasant atmosphere in which sudden sounds can become too striking, since they are the only detectable source at that time.

HEAR

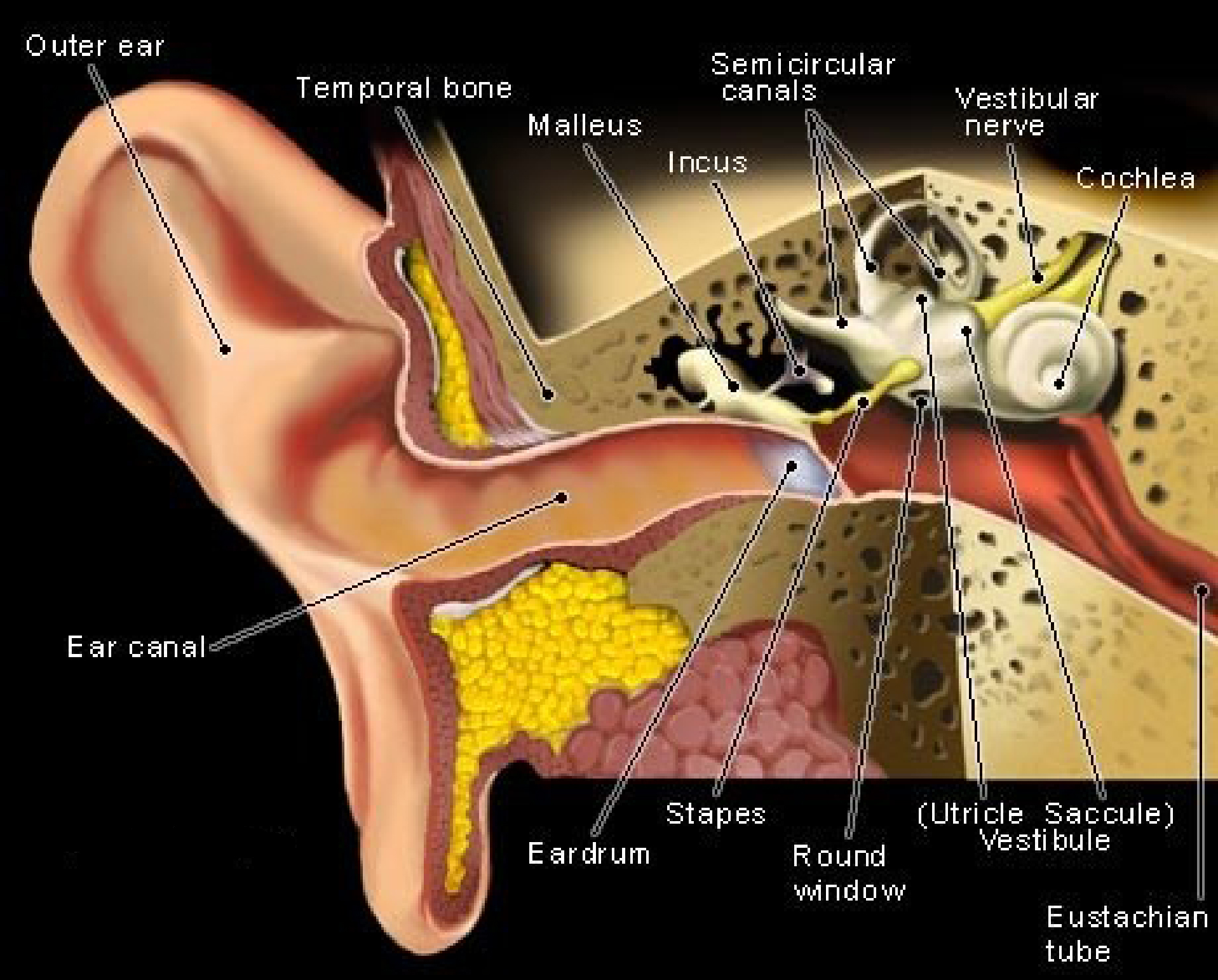

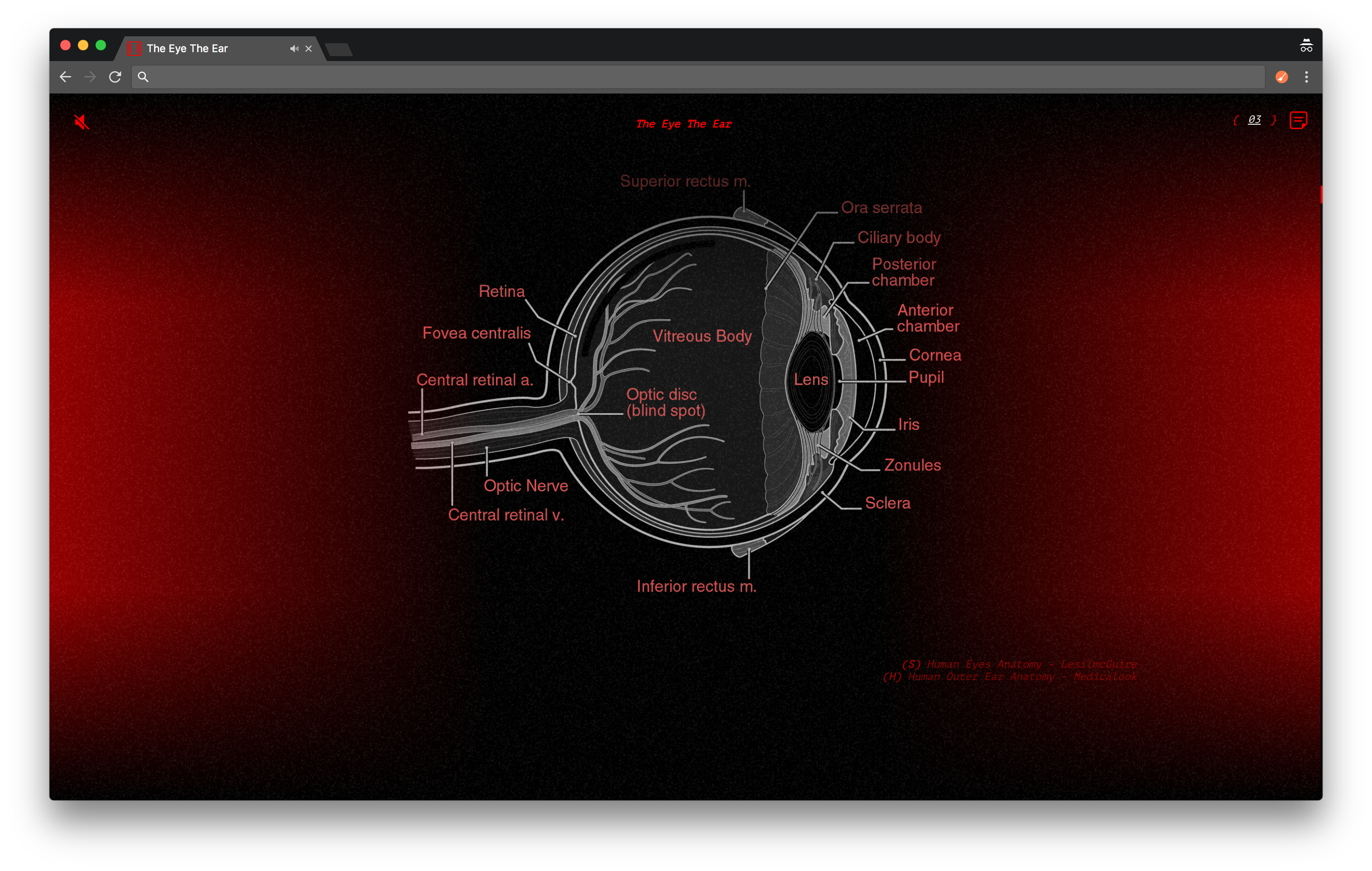

The ear is much more complex than a shell-shaped organ that collects and detects sound waves. It also plays a major role in our sense of balance. The ear consists of three main parts: the outer ear, the middle ear and the inner ear. fig. 09 The outer ear is composed of the pinna, or auricle, and the external auditory canal. Together they channel sound waves towards the eardrum, which causes it to vibrate. The pinna also protects the eardrum from damage. The middle ear is an air-filled space located in the temporal bone of the skull. It contains three bones: the malleus, incus and stapes. They are attached like a chain to the tympanic membrane (the eardrum, a thin, cone-shaped membrane that separates the external ear from the middle ear) and convert the sound waves that vibrate the membrane into mechanical vibrations of the three bones. The stapes is connected to the inner ear. The inner ear has two important functions: hearing and balance. It consists of small tubes that are filled with fluid. This is where the cells responsible for hearing are located (the hair cells of the organ of Corti). We hear when sound from the environment is funnelled into the outer ear and causes the tympanic membrane to vibrate. Those vibrations are converted into mechanical vibrations of the ossicles (the auditory ossicles, which are among the smallest bones in the human body). The inner ear also has three semicircular canals, tiny fluid-filled tubes that are responsible for our balance. When the head moves, the liquid inside those canals moves as well and displaces the tiny hairs that line each canal. When these hairs move, they trigger nerve impulses that transmit that information to the brain. 16

figure 09

Anatomical Structures of the Human Ear

The ear is much less symbolized than the eye. One important association, though, is with the whorled shell and the sun; since the shell is a symbol of the vulva, there is a close connection between the ear and birth itself. 17 The ears are already developed inside the mother’s womb. A baby’s ears are mostly developed by about the 20th week of pregnancy, and babies start responding to sounds around the 24th week. A baby’s eyelids remain closed for about 28 weeks (the retinas need more time to develop). So there is quite a time gap between those two developments. 18 The other difference is that babies can hear sounds from outside the womb. The noise from outside travels through the mother’s body to the womb. Although sounds from inside the womb are louder than those from outside, loud outside noises can cause stress to the baby. Irregular and fast heartbeats can also be heard and felt.

There seems to be a connection between the time difference of those two developments and the different ways we use our brain. In terms of reaction time and the process of how we perceive, our brain consists of three parts: the old brain (hindbrain), the middle brain and the new brain (neocortex). They all perform very different tasks and even their cells are structured differently. They are independent but also work together as they pass information to one another. This is needed not only to make quick decisions in the face of danger, but also to solve complex problems. Imagine driving your car when all of a sudden a kid on a bicycle appears in front of you. In that case you need to respond rapidly, without thinking about the distance, the color of the bike or what time it is. It could be a matter of life and death. This is what the old brain is capable of: it constantly scans for any signs of danger, sources and mating. Since the beginning of our existence, these three have been crucial for survival. All incoming sensory data is collected by the old brain: everything you hear, see, smell, taste and feel. Because the old brain works at a subconscious level, most people will not notice it. When the old brain can’t make a decision, it passes it on to the new brain. The new brain is much slower, so this only happens when absolutely necessary. The middle brain is responsible for analyzing social behavior, facial expressions, gestures, tone of voice, etc. Our new and middle brain can change throughout our lives, whereas the old brain remains mostly the same as in generations before. 19 Hearing is very important to the old brain. Loud noises often equal danger, especially in an environment where the eyes cannot fully function, such as a dark forest. Any sound source could be a possible danger. During our sleep, the eyes are too busy following the images of our dreams, but the ears are always alert and can send signals to the brain at any time and wake us up in seconds. Try saying someone’s name while that person is asleep: people are more likely to wake up when they hear their own name than when they hear something else.

Our ears, along with our other senses, never reflect the environment as it truly is. Everyone has a different Umwelt. The term comes from the German Umwelt (plural Umwelten), meaning environment or surroundings. We can only perceive our own self-centered world, our own Umwelt. As direct as hearing may seem, it too produces illusions: auditory illusions. They have more to do with our long-term memories. We often recognize sounds we have heard before. We think we hear the voice of someone familiar, or we hear similar-sounding words and interpret them as our name.

SEEING WHAT WE HEAR

HEARING WHAT WE SEE

Seeing and hearing are quite different from each other. However, they are both fundamental senses when it comes to communication, or rather looking and listening are, since both of those happen consciously. This is why I mainly focus on those two. We use our ears to listen to the person we are having a conversation with and, of course, to our own voice to guide our speech. Our eyes scan the other person’s emotions, facial expressions and hand gestures, and read their lips. Our senses often work together in harmony and enable social engagement. But they can also trick each other. The eyes and ears are always working together to combine visual and auditory speech, and together they create our speech perception when someone is talking to us. However, what our eyes read from the lips may differ from what that person is actually saying. The brain cannot help but integrate visual speech into what we hear. This phenomenon is called the McGurk Effect. The illusion occurs when the auditory component of one sound is paired with the visual component of another sound, leading to the perception of a third sound. 20 A great example is a video clip of a person pronouncing the syllable “bah”: replace the image with footage of that person pronouncing “fah” but keep the “bah” sound, and your brain will correct the audio so that it sounds like “fah”, creating a consistent speech perception.

figure 10

explanation video of the McGurk Effect

On the other hand, sound has a lot of power when it comes to changing perception. It can empower us, inspire us, anger us, motivate us, and change our perspective on the world. Not only can sound achieve all those things, it can also affect us on a deeper, more subconscious physiological level. It can decrease or increase our pulse, change our blood pressure and even reduce levels of anxiety and depression. Our heart reacts to sound waves, in particular steady rhythms, which is why we feel relaxed at 60 BPM (beats per minute) and uplifted at anything higher than 100 BPM. When an innocent kids’ cartoon is played and the original audio is replaced with horror sounds, it changes the whole experience of that movie. For instance, a Winnie the Pooh clip with horror soundscapes changes its whole meaning, and we immediately start to interpret it differently. A scene where the animated teddy bear cannot sleep first looks funny and innocent, but when the sounds change it turns into a nightmare. The opposite works too: simply turning off the sound of a horror movie makes it less scary. Replacing the sound with happy music removes the scary factor altogether and makes it seem like a comedy. Not only can sound change the mood of a movie, it is also capable of altering what we see in our dreams. In some cases it forges a completely new scene. During a sleep experiment, a woman was monitored during her deep sleep, at first without any interference. After some time, the researchers woke her up and asked her what she had dreamed. It was a common description of mixed experiences she had had over the past few days. Then she went to sleep again. While she was asleep, the researchers turned on a soft soundscape consisting of seagull sounds and waves splashing onto a shore. Again they woke her up, and she had had a very relaxing and delightful dream. In the third round, she slept while the sound of sirens and people screaming was played in the background. The sound was as loud as it could be without waking her up. They woke her up, and she told the researchers she had been dreaming of being trapped in a fire, full of anxiety.

Do you enjoy the crispness and flavor of crisps? Of course their actual texture will tell you how fresh they are. But that is only a part of the experience. The other part is the crackling sound of the food. Another experiment showed that sound can change our taste. A group of blindfolded participants were asked to eat the exact same crisps while wearing headphones. First they ate them with the actual crackling sound playing. Then the audio was changed to a wetter, moister sound of crisps. The participants all reacted similarly, stating that these crisps were older and less tasty. 21 These illusions take place even if we are aware that they are illusions. They are uncontrollable notions that I assume are partly processed by the old brain.

EXPERIMENTS

I have always worked with sound in my projects. This may explain my urge to write this text, and the question I raised: Can I still be a designer when I am blind? I always try to find the true meaning of sound in combination with visual material. The two can go together seamlessly but can also break each other apart. It is a constant battle between them, an ongoing contest over which one will get through. When they are not in sync, or when they convey different messages, your brain gets confused. Sound can be a very manipulative tool. With it you can change people’s mood, prevent crimes or make customers buy more expensive goods in your store.

One project in particular, which could be a direct translation of this text, is The Eye, The Ear (2016). It is an interactive website in a long-form format that tests the dominance of your eyes compared to your ears. The visual content is having a dialogue with the auditory content. In some cases they are even having arguments, sometimes purely biological, other times completely abstract. The visual parts are displayed in short sentences or in imagery (the eye) on the screen, while the auditory part narrates or plays sounds (the ear). The narration is different from what is displayed on the screen. Usually text and narration have to be in sync in order to convey a clear message. When this is not the case, your brain will be confused and has to select what to focus on. While testing the website, something unexpected happened. Single spoken words started to sound like the written word, whereas with longer sentences the audio took over. It made me and other users unable to read the text on the screen. The first three words were legible; after that, the narration took over completely. Even a drawing of an eye, in which all the anatomical parts were shown clearly, could not compete with the narrated ear parts. fig. 11

figure 11

The Eye, The Ear, 2016

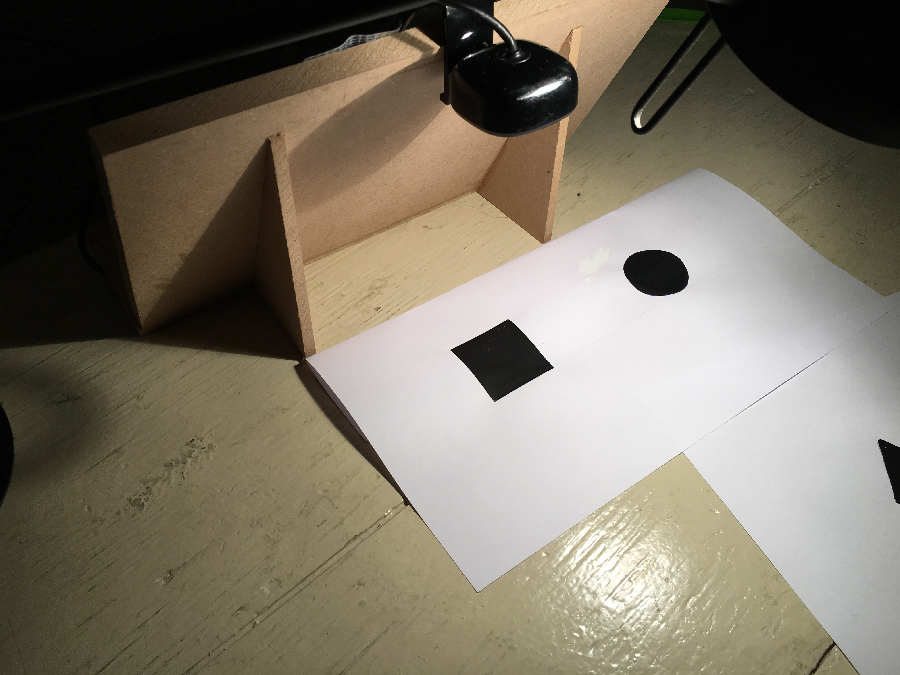

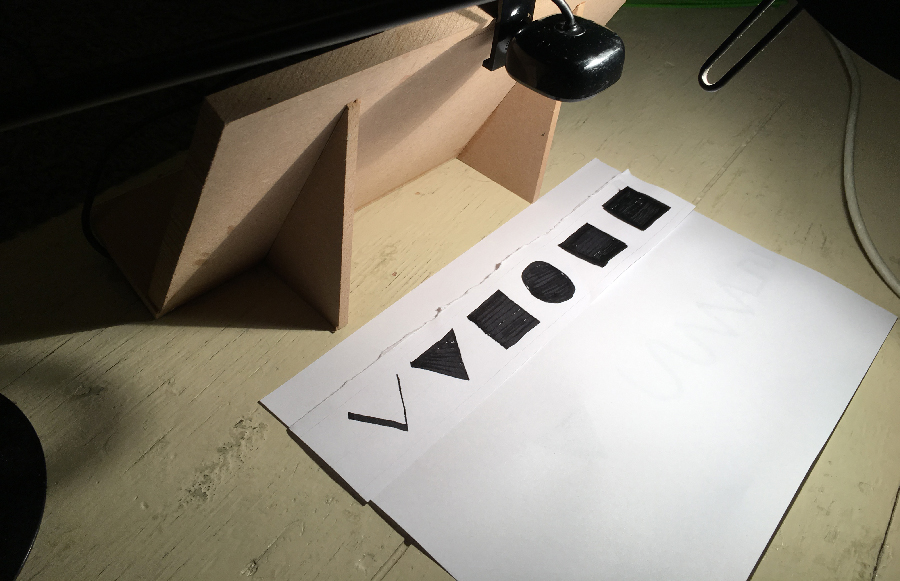

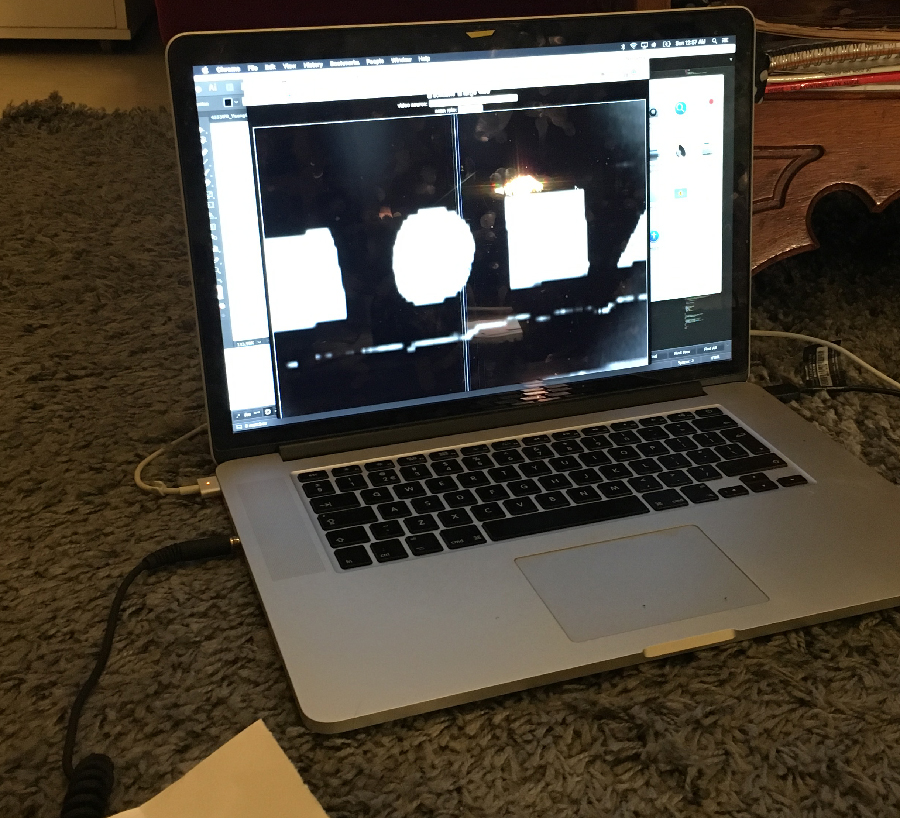

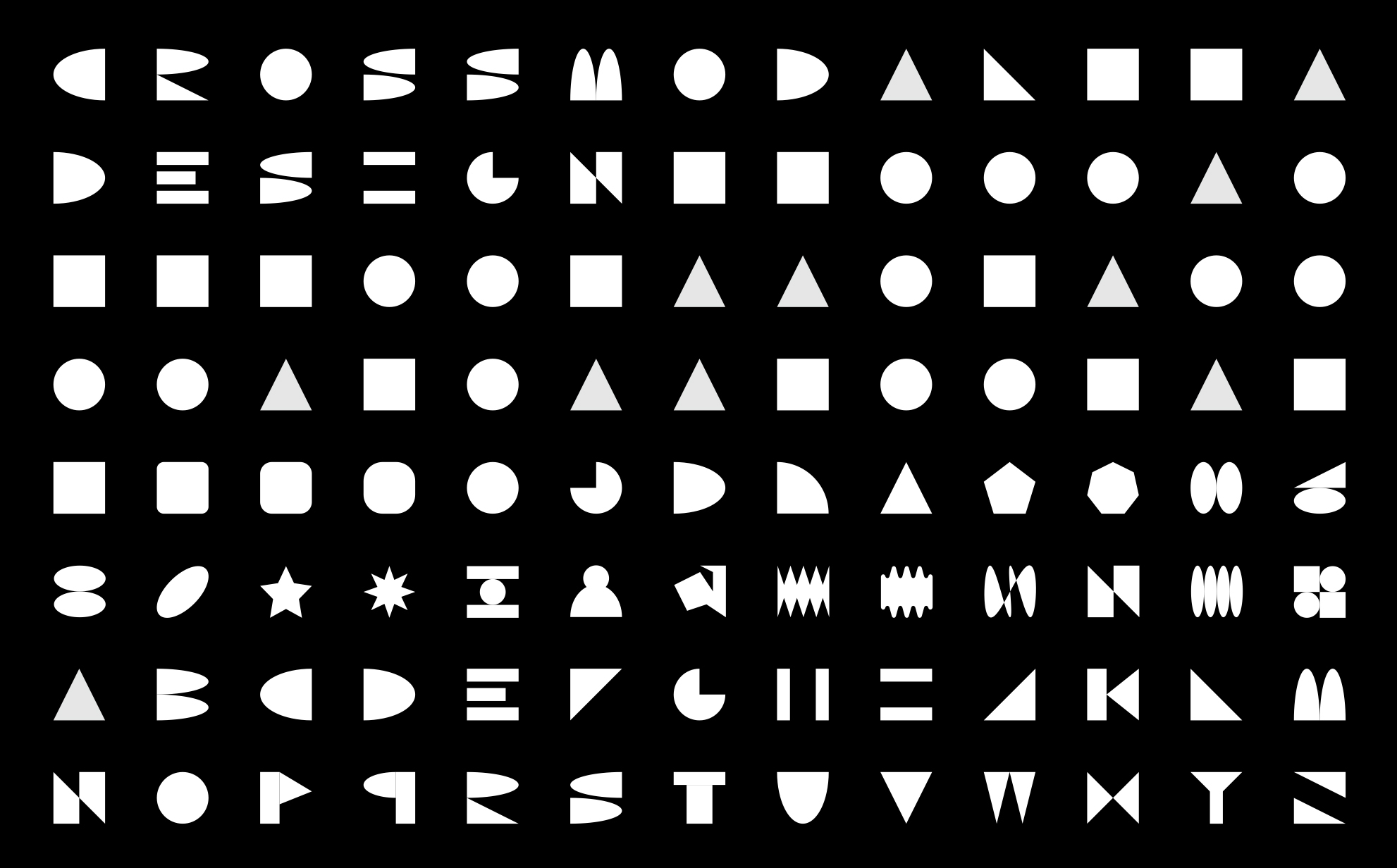

While doing research I became specifically interested in sensory substitution, which is a non-invasive technique, meaning that it does not involve introducing instruments into the body. It can be used to circumvent the loss of one sense by feeding its information through another channel. If one of your sense organs stops functioning, the brain cells responsible for that sense remain intact. They are still usable and can adapt and learn to be put to other use. So in theory it should be possible to convert sensory data, say visual perception, into another kind of sensory data, such as sound. There are many products available today that claim to help blind or deaf users by using a sense they still have. One in particular really intrigued me: The vOICe, 22 created by the Dutch scientist and researcher Peter Meijer. Most products cost thousands of euros. The vOICe is free; you only need a webcam, or preferably camera glasses. fig. 12 Another plus is that it makes use of the senses a blind person still has and does not try to enhance the bit of sight that is left, or try to recall sight that may never have been there. Restoring sight can be very problematic: those who are born blind have a very different visual system. Restoring vision is going to result in a very different, sometimes awful perceptual experience: they will have an overload of visual stimuli inside the brain. Also, they will see black for the first time. Many people think blind people only see black, but this is not true; it is said to be very different. For instance, try looking at the back of your head. 23 But this does not apply to the vOICe. It is a very simple concept: images are converted into sound by scanning them from left to right while associating elevation with pitch and brightness with loudness, which offers the experience of live camera views through image-to-sound renderings. This way objects and people can be recognized, since each shape has its own distinctive sound. A square object sounds solid and starts and ends abruptly, while a round object sounds smoother: the waveform fades in and out. The same goes for the pitch: since the top of a circle is highest in the middle, the pitch and volume peak there and then fade out in the same way. A triangular shape works the same way, but of course more abruptly than a circular shape. I found out there is also a web version available, and I immediately went to try it out. I was a bit skeptical at first because it sounded like garbled noise. But as soon as I held my webcam towards objects against a background that created a high-contrast view, it started to sound like the shape. I got more curious and started to draw shapes on a sheet of paper. With a thick, black marker I drew a square, then a circle and lastly a triangle. I was amazed by how quickly I could recognize the sound associated with the silhouette of each shape. Then I drew a whole range of those shapes in random order, blindfolded myself and started to scan the paper with my webcam. After some practice, I could recognize all of them flawlessly. fig. 13 To make it harder, I created them digitally, with more complex shapes. fig. 14
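To make the left-to-right mapping concrete, here is a minimal Python sketch of the same idea. It is not the vOICe’s actual code: the function name `image_to_sound`, the 500 to 5000 Hz frequency range, the one-second scan and the sample rate are all assumptions chosen for illustration. Each image column becomes a slice of time, each row becomes a sine tone whose pitch rises with elevation, and brightness sets how loud that tone is.

```python
import math


def image_to_sound(image, duration=1.0, sample_rate=16000,
                   f_low=500.0, f_high=5000.0):
    """Turn a grayscale image (rows of 0.0-1.0 brightness, top row first)
    into a list of audio samples, scanning the columns left to right.

    All default values are illustrative assumptions.
    """
    n_rows = len(image)
    n_cols = len(image[0])
    # One sine frequency per row: the top row gets the highest pitch.
    freqs = [
        f_low * (f_high / f_low) ** ((n_rows - 1 - r) / max(n_rows - 1, 1))
        for r in range(n_rows)
    ]
    samples_per_col = int(duration * sample_rate / n_cols)
    samples = []
    for c in range(n_cols):
        for i in range(samples_per_col):
            t = (c * samples_per_col + i) / sample_rate
            # Brightness sets loudness: black (0.0) contributes silence.
            value = sum(
                image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                for r in range(n_rows)
            )
            samples.append(value / n_rows)
    return samples


# A bright square on the right half of a black 4x4 image.
square = [
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]
audio = image_to_sound(square)
```

In this sketch the first half of `audio` is silent and the second half carries two mid-range tones, which matches the observation that a shape on the right side of the view is heard late in the scan.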

figure 12

Camera Glasses

figure 13

The vOICe test 1

figure 14

Digital Test Shapes

However, from a designer’s perspective, I saw this software more as a tool to preview a design or review changes. It does not tell me where I am, for instance on a screen. For that we might need a few steps in between. At that point I had not tried to ‘design’ something yet, so I first wanted to know what the software would be capable of in that sense. And I was surprised. I practiced for about a week, scanning shapes with my webcam, and got better and better at it. Then I got myself a few black, non-reflective foam boards and prepared all kinds of shapes, including those I had been practicing with before. I printed them and cut out the triangular, rectangular and oval shapes, then attached the webcam under a coffee table that was just high enough to show the complete A3-sized board. With some fiddling around, I got a nice overview of the whole board and set up the desired lighting.

Then I turned on the vOICe. Since black means silence, only a few bits of sound were coming from my headphones. I put on my blindfold, sat on the ground and gathered all my cut-out shapes. This scene may have looked very clumsy, but I felt I was doing something new and important. While feeling with my right hand towards the table, I heard a noise. I was inside the viewport (virtual window) of the webcam. I started feeling the different shapes I had lying next to me and picked up a medium-sized circle. I had no idea where I had placed it on the board at first, but I learned that a lower sound means a lower position, so it had to be somewhere at the bottom of the board. The noise from the circle also started quite late in the sound sequence, which refreshes every second, meaning it was on the right side of the board (it scans from left to right). I then grabbed a long rectangular shape and placed it on the board. Its sound was rising, meaning the left end of the shape was lower and the other end higher. It was fascinating to hear all those changes and to visualize how the shapes would have been placed in my visual perception. I tried to place the objects in a straight horizontal line, which is fairly easy when you have sight. But with this method you have to listen and turn the object slightly until you no longer hear a sound like an ascending musical scale. While I was turning it, it started to sound flatter, with less change in pitch. While moving shapes around and constantly checking the soundscape, I created some quite interesting compositions that I would have created very differently using my eyes. fig. 15 Of course these basic shapes are just the start of this experiment. It cannot replace the work I would normally create. However, it is a matter of understanding the concept in its purest form. Although it is possible to create such compositions without the use of vision, it was not feasible to do this without visual preparation. I could never have cut out these shapes, let alone produced them on the computer and printed them out, without using my eyes. This would be fine if the process were only needed once, but cutting out shapes every time would be too time-consuming. This confirms my assumption about this software: the step in between was missing. My goal is to design something digital and convert it to an analog entity. The first step towards that is to create a system of coordination for the screen.

figure 15

Compositions made using the vOICe

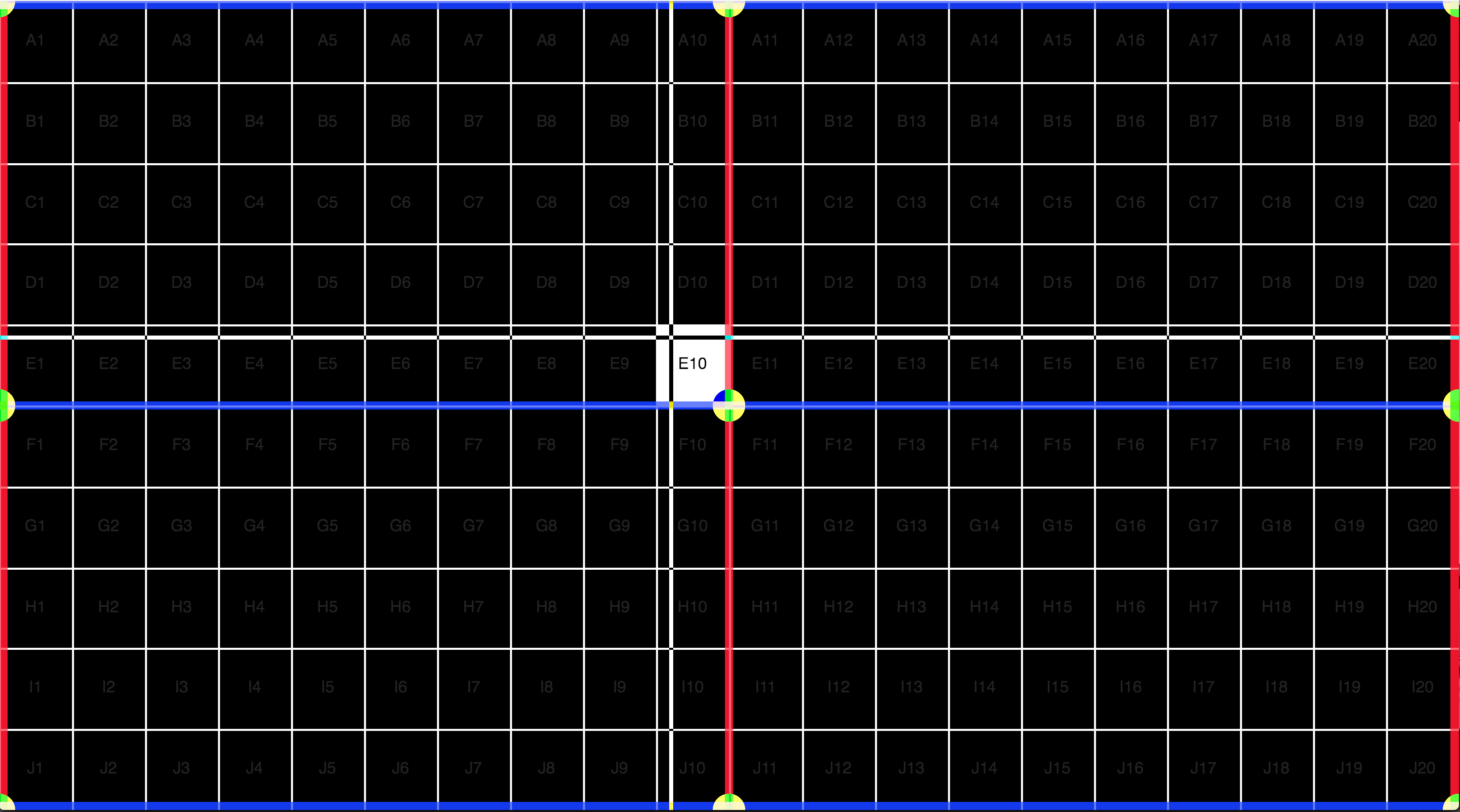

I created a webpage that is divided into a grid of boxes with equal dimensions. The grid is based on an X and a Y axis in a 2:1 ratio. The horizontal boxes run from 1 to 20, meaning the middle is between 10 and 11. The vertical boxes run from A to J, meaning the middle line is between E and F. Those middle points are also indicated with a line that separates them: X center and Y center. The user can now navigate by moving the cursor on the screen. A computer-generated voice (text to speech) will tell you which box you are in at that moment, for instance J20. This means you are at the bottom-right corner. There are also center indicators that tell you if you are at the center of the whole viewport or at the top, top center, right, right center, left, left center, etc. This setup requires further experimentation; it is not that precise and needs some improvements. Within the boxes there are no exact coordinates; it is a rough estimate of where the cursor is placed. fig. 16
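The step from cursor position to spoken label can be sketched as a small function. This is not the code of the webpage itself: the name `cell_label` and the pixel-based arguments are assumptions, Python is used only to show the arithmetic, and the text-to-speech step is left out so that the function simply returns the label the voice would read.

```python
import string

COLUMNS = 20  # horizontal boxes, numbered 1 to 20
ROWS = 10     # vertical boxes, lettered A to J


def cell_label(x, y, width, height):
    """Return the grid label (for example 'J20') for a cursor position.

    x and y are pixel coordinates measured from the top-left corner of a
    viewport that is `width` by `height` pixels (hypothetical parameters).
    """
    # Clamp so that a cursor on the right or bottom edge stays in the last box.
    col = max(0, min(int(x * COLUMNS / width), COLUMNS - 1))
    row = max(0, min(int(y * ROWS / height), ROWS - 1))
    return f"{string.ascii_uppercase[row]}{col + 1}"


top_left = cell_label(0, 0, 1000, 500)
bottom_right = cell_label(999, 499, 1000, 500)
```

Because the function only reports the box, not the position inside it, it has the same limitation the experiment ran into: the label is a rough estimate of where the cursor is.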

figure 16

Grid wayfinding test

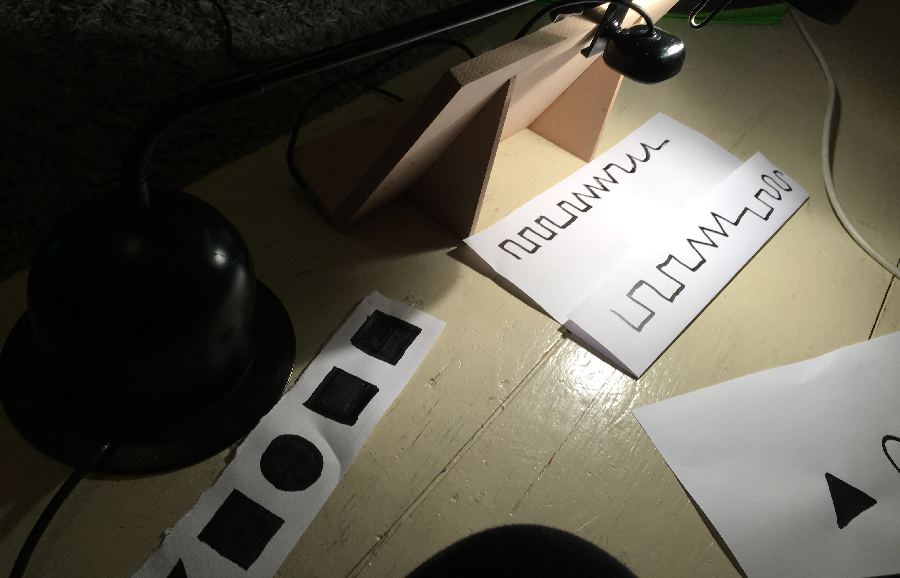

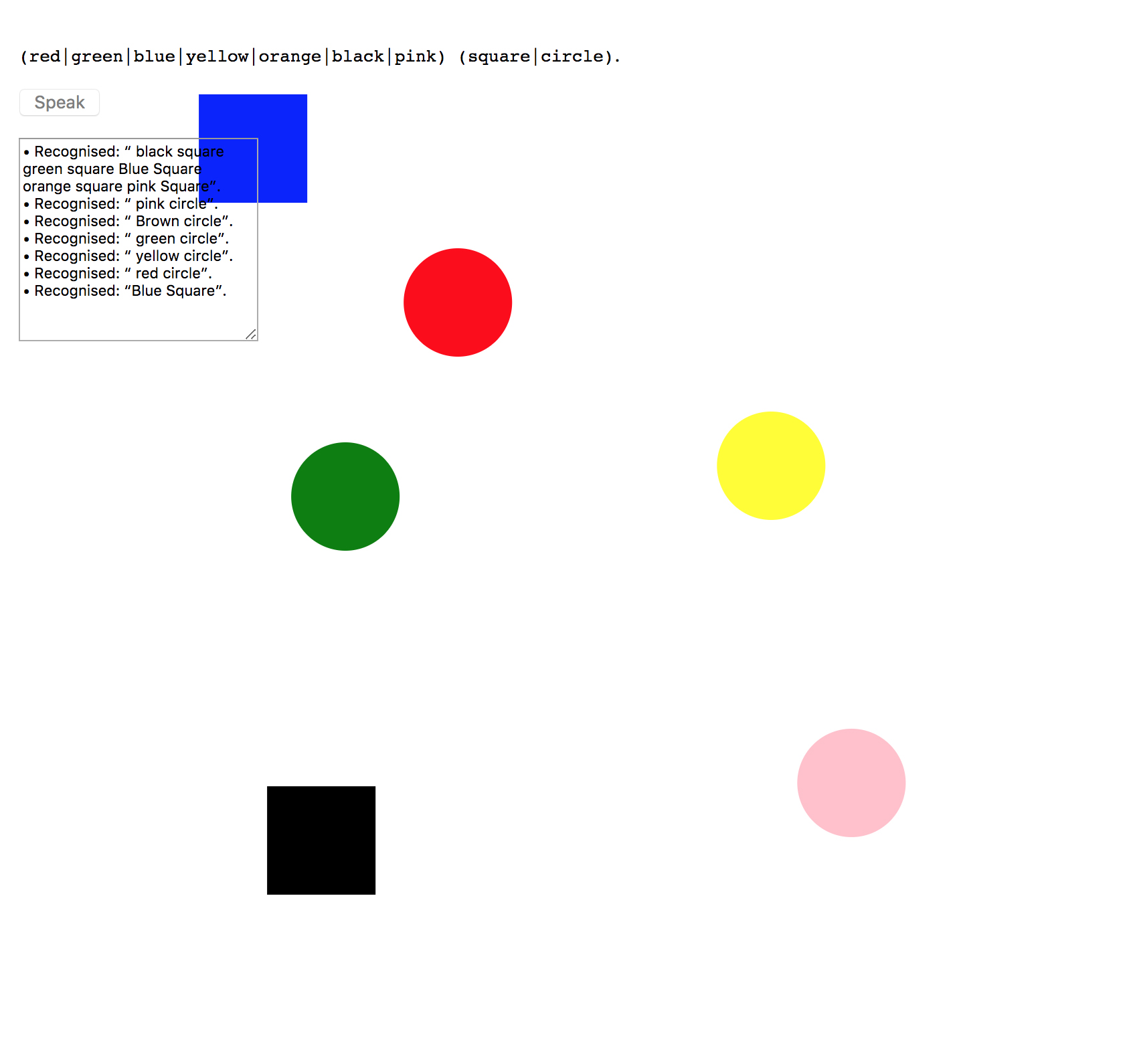

The third tool I want to experiment with is the Web Speech API (application programming interface). It comes in many forms and has been specified for the web since 2012. Its speech recognition interface is scripted to respond to a recognized phrase, either by running a function or by having the computer-generated voice speak an answer. It is similar to the grid-based experiment, but it now uses the user’s voice as input instead of the computer’s voice as output. With this technique I was able to generate shapes with my voice. When users point the cursor at any place within the browser window, they can specify what shape they want to have there. For instance, when the user says ‘black square’, it will generate a black square at the position of the cursor. These shapes are made out of anchor points and are thus vector images, so they can be resized to any extent. Right now the only options are a square or a circle and a range of 10 colors. However, this technique has much more potential: it can handle more complex tasks and can come a step closer to designing the things we are used to. fig. 17
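The part of this experiment that turns a recognized phrase into a shape can be sketched separately from the speech recognition itself, which runs in the browser and is not shown here. The sketch below is an illustration under stated assumptions, not the experiment’s code: `parse_command` is a hypothetical name, and the color list is invented, since the text mentions ten colors without naming them.

```python
SHAPES = {"square", "circle"}
# Hypothetical palette: the text mentions ten colors but does not list them.
COLORS = {"black", "white", "red", "orange", "yellow",
          "green", "blue", "purple", "pink", "brown"}


def parse_command(transcript, cursor):
    """Turn a recognized phrase such as 'black square' into a shape description.

    `cursor` is the (x, y) position where the shape should appear.
    Returns None when the phrase lacks a known color or a known shape.
    """
    words = transcript.lower().split()
    color = next((w for w in words if w in COLORS), None)
    shape = next((w for w in words if w in SHAPES), None)
    if color is None or shape is None:
        return None
    x, y = cursor
    return {"shape": shape, "color": color, "x": x, "y": y}


command = parse_command("Black square", (120, 80))
```

Returning a plain description rather than drawing directly keeps the voice input independent of how the vector shape is eventually rendered.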

figure 17

Web speech API colored shapes test

So in theory, if I combined those three techniques, I would be able to design something digitally, exclusively with my ears, no peeking allowed: the vOICe to ‘see’ the outcome and progress, text to speech for coordination, and the Web Speech API for creating the outcome with verbal instructions.

CONCLUSION

Over the past decades, we have demanded more and more visual stimuli, and we are receiving them, too. Paradoxically, this visual overload might cause us to see less. As I have shown in this thesis, seeing has become very important to us, to the extent that we almost force the blind to see again. We design everything to please our craving marbles and tend to forget about the other senses. What we see is far from reality and is, moreover, only a fraction of our perception. The hierarchy of the senses has a different order and different components across the world; it is not a given. I think there should not be a sensory hierarchy at all. By doing these experiments, my visual consciousness has changed. I started examining objects more precisely and imagining what they would sound like, a more constructive, conscious way of looking. Although these tools are not capable of replacing our designer eyes yet, I have shown that there is a way. Despite the fact that technology has come a long way, blindness is still not 100% treatable; for that reason, non-invasive solutions might be more applicable. ‘Seeing is an advantage, not a necessity. Blindness is a state of mind, more than a sensory impairment. People who have the biological ability of sight but still don’t see, could perhaps be called blind.’ 24
